A Fast Flight of Small Language Models on a Vanilla Laptop

A simple comparison of fine-tuning times with the Phi-2 and OPT-350m language models.

Is smaller better? I can use my non-GPU-enhanced laptop to run (or "inference") small open-weights language models. I'm generally excited about the buzz around Phi-2 and other compact language models, and I've just started experimenting with them. An open question, however, was whether I could also fine-tune those models on my laptop.

Consider some recent reading material:

Phi-2: The surprising power of small language models - from the Microsoft Research blog.

MobiLlama - from GitHub: MobiLlama: Towards Accurate and Lightweight Fully Transparent GPT.

Earlier, I ran smaller open-weights models on a vanilla laptop. I detailed my thoughts on context and inspiration and provided jump-off links on how I did it using WSL2.

Recently, I conducted a fine-tuning experiment on the same laptop (32 GB RAM, no GPU). I wanted to get a sense of performance. In this experiment, I tested two small language models:

Phi-2 model - available from Hugging Face - 2.7 billion parameters.

Meta AI's OPT-350m - available from Hugging Face - 350 million parameters. Reference: OPT: Open Pre-trained Transformer Language Models.

This experiment aimed to quantify roughly how long it took to fine-tune these models on my laptop using a limited dataset and the Hugging Face Transformer Reinforcement Learning (TRL) API. For information on TRL, consider the following link.

TRL - Transformer Reinforcement Learning API (Python).

While I found developing with TRL simple, settling on parameters that suited both my use of the TRL API and the two experiment models was time-consuming. Subtleties include deciding which parameters are relevant depending on whether there is a GPU (not in this case) and whether quantization of model weights is used as a training optimization (not in this case). The test code below shows a minimal set of parameters that worked for me with both models. The model instances were obtained from the Hugging Face models archive.

The training data consisted of 1,200+ lines of JSON, as depicted in Figure 1. Each of the 200+ instruction-response entries pairs a short sentence of keywords from one paragraph of the following article with a summary of that paragraph in limerick form. ChatGPT generated both the keywords and the limericks under the author's supervision. The JSON file held about 140 KB of cleartext data.

ChatGPT and the Middlesex Fells Trail Analyzer | by Nate Combs | AI Advances (medium.com) - the source of the training data used in this experiment.
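As a rough illustration of the data layout, the sketch below writes and validates instruction-response records. It assumes one JSON object per line, as the train.jsonl file name in the script suggests. The field names instruction and response come from the fine-tuning script; the sample text and the helper names to_jsonl and validate_jsonl are hypothetical and are not taken from the real training data.

```python
import json

# Hypothetical sample entries: the field names match the fine-tuning script,
# but the text is invented for illustration and is not the real training data.
sample_entries = [
    {
        "instruction": "trail, ridge, morning fog, reservoir view",
        "response": "Placeholder limerick text for the first paragraph.",
    },
    {
        "instruction": "map, waypoints, elevation, analyzer",
        "response": "Placeholder limerick text for the second paragraph.",
    },
]


def to_jsonl(entries):
    """Serialize entries as JSON Lines: one JSON object per line."""
    return "\n".join(json.dumps(entry) for entry in entries)


def validate_jsonl(text, required=("instruction", "response")):
    """Parse JSON Lines text and check that each record has the required string fields."""
    records = []
    for line_number, line in enumerate(text.splitlines(), start=1):
        if not line.strip():
            continue  # skip blank lines
        record = json.loads(line)
        for key in required:
            if not isinstance(record.get(key), str):
                raise ValueError(f"line {line_number}: missing or non-string '{key}'")
        records.append(record)
    return records


jsonl_text = to_jsonl(sample_entries)
records = validate_jsonl(jsonl_text)
print(f"{len(records)} valid records")
```

A check like this is cheap to run before starting a training job that may take hours on a CPU.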

The code block below gives the core TRL routine used in this experiment. Switching between the OPT-350m and Phi-2 models only required changing the base_model definition.

# Measure start time

start_time = time.time()

from transformers import AutoModelForCausalLM, AutoTokenizer

from datasets import load_dataset

from trl import SFTTrainer, DataCollatorForCompletionOnlyLM

data_files="train.jsonl"

# load jsonl dataset

dataset = load_dataset("json", data_files=data_files, split="train")

# Select base_model by the model to be used.

base_model = "facebook/opt-350m"

# base_model = "microsoft/phi-2"

model = AutoModelForCausalLM.from_pretrained(base_model, trust_remote_code=True)

tokenizer = AutoTokenizer.from_pretrained(base_model, trust_remote_code=True)

tokenizer.add_special_tokens({'pad_token': '[PAD]'})

print("Base Model: {}".format(base_model))

def formatting_prompts_func(example):

output_texts = []

for i in range(len(example['instruction'])):

text = f"### Question: {example['instruction'][i]}\n ### Response: {example['response'][i]}"

output_texts.append(text)

return output_texts

response_template = " ### Response:"

collator = DataCollatorForCompletionOnlyLM(response_template, tokenizer=tokenizer)

trainer = SFTTrainer(

model,

train_dataset=dataset,

formatting_func=formatting_prompts_func,

data_collator=collator,

max_seq_length=2048,

packing=False,

)

trainer.train()

# Measure end time

end_time = time.time()

# Calculate the duration

duration = end_time - start_time

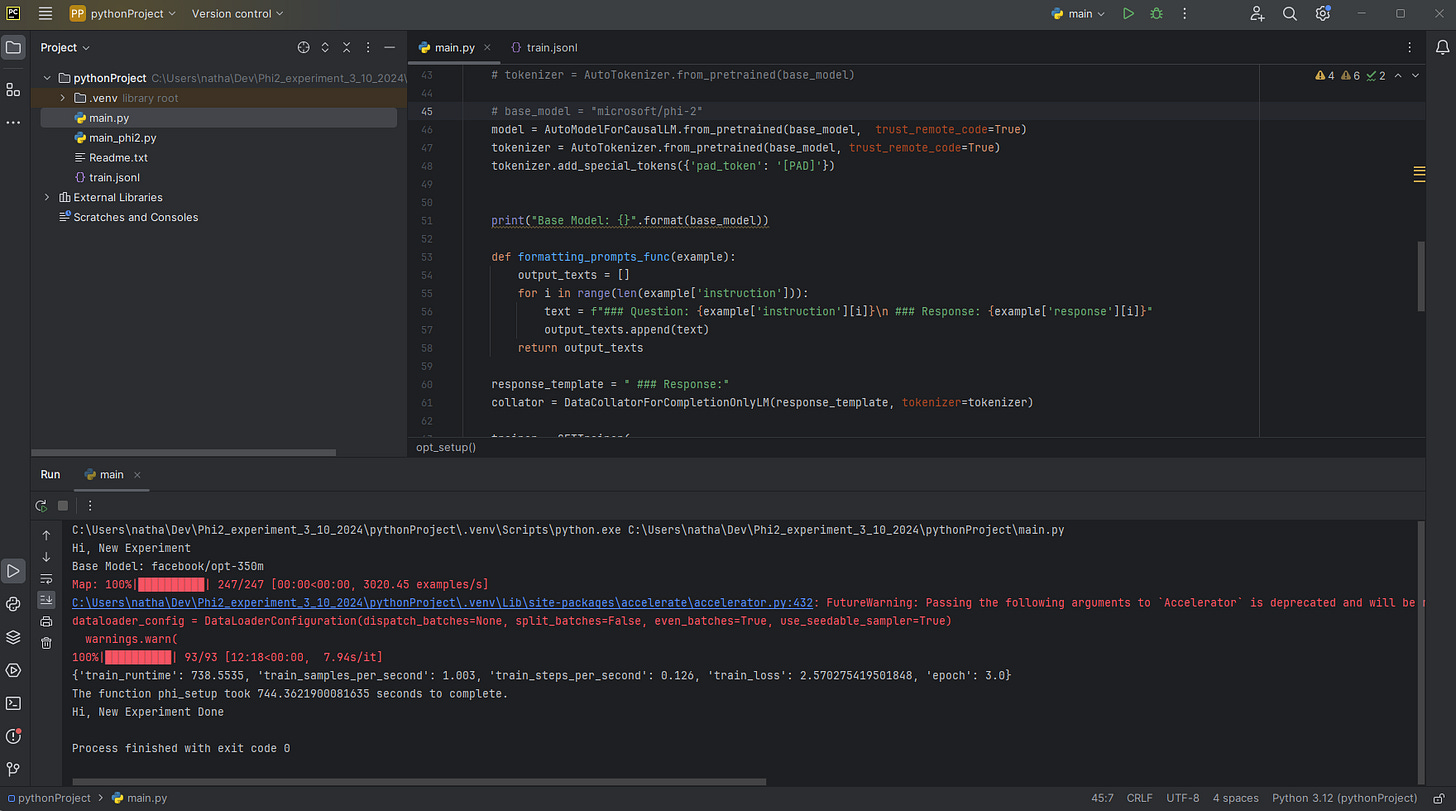

print(f"The function phi_setup took {duration} seconds to complete.")The TRL fine-tuning script was run in the Python terminal of a PyCharm Community Edition IDE. See Figure 2.
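For readers who want to see what the script's formatting function and response template do to a batch, here is a small string-level sketch. As I understand it, DataCollatorForCompletionOnlyLM uses the response template to restrict the training loss to the text after the template; the real collator works on token IDs, not strings, so this is only an approximation. The helper split_prompt and the batch contents are hypothetical.

```python
def formatting_prompts_func(example):
    """Build one prompt string per row of a batched example (same logic as the script)."""
    output_texts = []
    for i in range(len(example["instruction"])):
        text = f"### Question: {example['instruction'][i]}\n ### Response: {example['response'][i]}"
        output_texts.append(text)
    return output_texts


RESPONSE_TEMPLATE = " ### Response:"


def split_prompt(text, template=RESPONSE_TEMPLATE):
    """Hypothetical helper: split text into (prompt including template, completion)."""
    head, sep, tail = text.partition(template)
    if not sep:
        raise ValueError("response template not found in text")
    return head + sep, tail


# Invented batch in the column-oriented layout the formatting function expects.
batch = {
    "instruction": ["keywords one", "keywords two"],
    "response": ["limerick one", "limerick two"],
}

texts = formatting_prompts_func(batch)
prompt, completion = split_prompt(texts[0])
print(repr(prompt))
print(repr(completion))
```

If the template string never appears in a formatted example, there is no completion to learn from, which is why the template and the formatting function need to agree exactly, including the leading space.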

The IDE simplified managing the Python environment. I installed these Python modules:

    pip install -U bitsandbytes
    pip install -U transformers
    pip install -U peft
    pip install -U accelerate
    pip install -U datasets
    pip install -U trl

I also needed to enable Developer mode on Windows. For reference:

Enable your device for development - Microsoft Windows.

Discussion

The Phi-2 model took about 400 seconds per training iteration. A minimal training cycle of 3 epochs / 90+ iterations would take ~10 hours.

Meta AI's OPT-350m took about 9 seconds per training iteration. A minimal training cycle of 3 epochs / 90+ iterations would take about 14 minutes.

The time per iteration grew faster than linearly with parameter count: Phi-2 has roughly 7.7 times as many parameters as OPT-350m (2.7 billion versus 350 million) but took about 44 times as long per iteration (400 versus 9 seconds). I am unsurprised, as the laptop seemed to swap to slower memory more often with Phi-2 than with OPT-350m, although that is an anecdotal observation.
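The arithmetic behind these estimates can be checked with a few lines of Python. The per-iteration times and parameter counts are the figures reported above. The dataset size of 240 examples is an assumption (the article only says "over 200"), and the batch size of 8 and 3 epochs are, to my understanding, the Hugging Face training defaults that the script leaves unchanged.

```python
import math


def steps_for(num_examples, batch_size, epochs):
    """Optimizer steps for a run: batches per epoch (rounded up) times epochs."""
    return math.ceil(num_examples / batch_size) * epochs


def total_seconds(seconds_per_step, steps):
    """Total wall-clock estimate, assuming a constant time per step."""
    return seconds_per_step * steps


# Assumed: 240 examples, batch size 8, 3 epochs (see the lead-in).
steps = steps_for(num_examples=240, batch_size=8, epochs=3)

phi2_hours = total_seconds(400, steps) / 3600   # Phi-2: ~400 s per iteration
opt_minutes = total_seconds(9, steps) / 60      # OPT-350m: ~9 s per iteration

param_ratio = 2.7e9 / 350e6   # Phi-2 parameters / OPT-350m parameters
time_ratio = 400 / 9          # Phi-2 step time / OPT-350m step time

print(f"steps: {steps}")
print(f"Phi-2: {phi2_hours:.1f} hours, OPT-350m: {opt_minutes:.1f} minutes")
print(f"parameter ratio: {param_ratio:.1f}x, time ratio: {time_ratio:.1f}x")
```

Under these assumptions the step count comes out at 90, which matches the "90+ iterations" figure and suggests the real dataset is slightly larger than 240 entries.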

Note that the above code sample does not use Parameter-Efficient Fine-Tuning (PEFT), another option compatible with the TRL API. PEFT limits fine-tuning changes to a small set of adapters used with the model, leaving the base model weights frozen. There are benefits to using PEFT, including memory and compute efficiency, so the training times reported above can likely be improved.
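To give a feel for why adapters are cheaper, the sketch below counts trainable parameters for LoRA, one of the adapter methods PEFT supports. The article does not say which PEFT method would be used, so LoRA is an assumption here, and the layer size and rank are hypothetical values chosen for illustration and are not taken from Phi-2 or OPT-350m.

```python
def full_params(d_in, d_out):
    """Weights in a dense d_out x d_in projection (bias ignored)."""
    return d_in * d_out


def lora_trainable_params(d_in, d_out, rank):
    """LoRA trains two low-rank factors, B (d_out x rank) and A (rank x d_in),
    in place of updating the full d_out x d_in weight matrix."""
    return rank * (d_in + d_out)


# Hypothetical layer: a square 1024 x 1024 projection with LoRA rank 8.
d_in, d_out, rank = 1024, 1024, 8

full = full_params(d_in, d_out)
lora = lora_trainable_params(d_in, d_out, rank)
fraction = lora / full

print(f"full fine-tuning: {full:,} trainable weights")
print(f"LoRA (rank {rank}): {lora:,} trainable weights")
print(f"fraction trained: {fraction:.2%}")
```

Fewer trainable weights means less optimizer state to keep in memory, which may matter on a 32 GB laptop that already seemed to swap under full fine-tuning of Phi-2.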

The experiment was a success. I showed that I could fine-tune Phi-2 on my laptop using the TRL API. It took a while to find a set of parameters that worked, but that was also a learning opportunity.

I'm considering more experimentation, specifically with the Phi-2 model. While I would not do that training on the laptop (I'm setting up an Amazon Web Services EC2 instance for that), I can at least prototype most of the code on the laptop.

References

TRL's Supervised Fine-tuning Trainer. API documentation for the SFTTrainer used in this article's code sample; contains useful examples and explanations.

Load adapters with PEFT. Hugging Face documentation on the use of PEFT.

PEFT: Parameter-Efficient Fine-Tuning of Billion-Scale Models on Low-Resource Hardware. Hugging Face research blog description of PEFT.