Code, Tests, and Flashlights: Latest Notes from a Prolog Engine Experiment

Like a lion in winter, every failed test still hears the summer call.

This feels like a good moment to pause and share an update on my ongoing experiment: building a Python-based logic programming engine (a Prolog in miniature) designed for interactive fiction. The project is a collaboration between me, Claude Code, and GPT-5.

Alongside the technical work, I’ve been just as focused on the process itself—what it’s like to develop side-by-side with large language models. Test-driven development, continuous refactoring, and directed iteration have become the core of my methodology. In the last update, I introduced those practices. Looking forward, I want to demonstrate how they will be adapted and refined in practice with an LLM collaboration.

Kickoff and Early Momentum

The kick-off post from several weeks ago is given below.

Fly-By-Wire Coding with AI

Thanks for reading Nate’s AI-ish Substack! Subscribe for free to receive new posts and support my work.

The project has expanded significantly since then, fueled by the productivity of my LLM collaborators. I’ve experimented with this kind of collaboration for some time, but the arrival of the agentic Claude Code marked a turning point. Instead of the slower, cut-and-paste style of iteration I’d relied on before, Claude brought a step-change in speed and fluidity—enough to fundamentally shift the pace of development.

Alright, without further ado—

Progress is well underway. After three weeks of rapid development, there’s simply too much code to manage any way but “coding-by-wire.” Still, the focused cycle of iteration—Claude Code driving implementation, GPT-5 supplying targeted feedback—has steadily sharpened the test suite, increased coverage, and driven error counts down.

The Numbers

(Code statistics generated by Claude Code)

Updated KB Engine Project Statistics

Total Project Size

40,418 lines across 138 files (Python + Prolog)

By Area

Core KB Engine: 10,009 lines across 15 files

Test Coverage: 16,890 lines across 76 files

- 549 unit tests (functions starting with test_)

Knowledge Representation: 3,809 lines across 18 files

- Prolog files (.pl)

- YAML configuration files (.yml, .yaml)

Documentation: 27,150 lines across 33 files

- Markdown, text, and RST files

Key Metrics

- Test-to-Code Ratio: 1.69:1 (16,890 test lines / 10,009 core lines)

- Tests per Core File: 36.6 (549 tests / 15 core files)

- Average Core File Size: 667 lines

- Average Test File Size: 222 lines

Unit test pass/failure plot generated by GPT-5.

So where are we now?

The here, now, right now (aka status)

Earlier last week, in a conversation with GPT-5, we estimated the project was about 60–70% of the way to its first milestone: solving a classic Prolog problem (I’ll share more about that in a later post). I’ve included more from that exchange further down.

In truth, we may be even further along now—but the focus has shifted to hardening what’s already in place. The last few missing pieces remain on the horizon, but for now, stability and refinement take priority over new features.

That means we’ve been conducting extensive testing. Note in the graph above, there are now over 500 unit tests. In addition to increasing the number of tests, we’ve been reviewing and revising the test suite. It’s all good.

How is Claude Code doing?

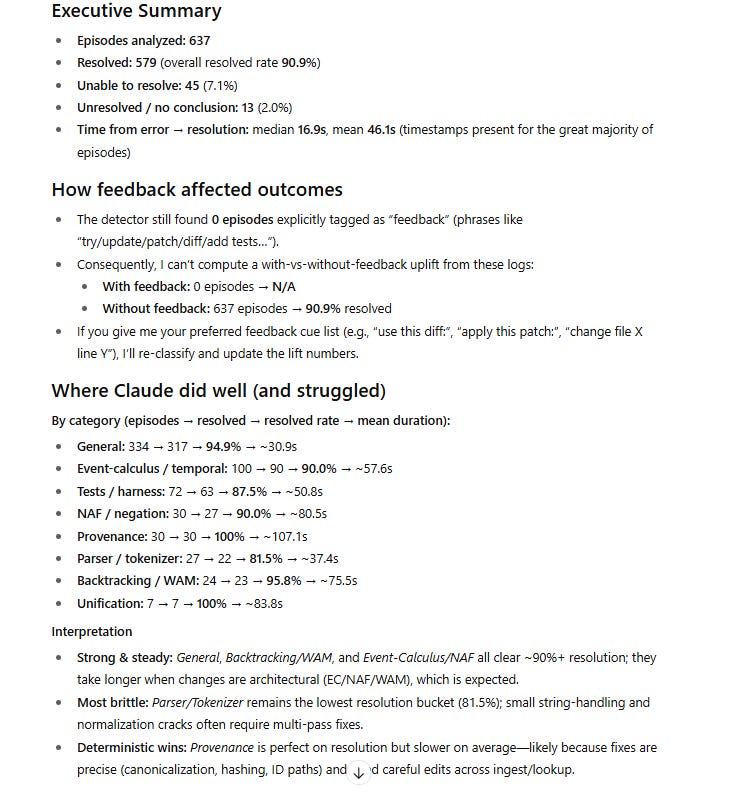

I also ran last week’s Claude Code session transcripts through GPT-5 and asked it to analyze how effective our interactions had been (see the figure below).

I then ran GPT-5’s analysis through Claude Code, which offered a number of minor corrections that don’t change the overall picture. The results matched my own impression: by and large, the sessions were effective and productive, with a steady rhythm of iteration and refinement.

The conclusions weren’t surprising, though I was delighted by the level of detail. The areas where Claude Code took the longest—and was least effective—were exactly where I’d already been leaning on GPT-5 for independent analysis: the hardest problems, for example, bugs with cross-cutting impacts that touched both domain predicates and the interpreter code.

So far, though, nothing has risen to the level of a showstopper. These issues simply demand more time and deeper analysis, not a change in direction.

In one of my chats, Claude Code provided concrete suggestions for how I could craft more effective debugging prompts (see below). I suspect there’s a lot of mileage to be gained from refining prompt strategy alone, and I’m looking forward to exploring that.

For example, I asked Claude Code: “How might you improve test understanding—and identify root causes more often?”

To improve test understanding and root cause identification, I should adopt a more systematic approach:

Better Test Analysis:

1. Read the full test first - Understand what behavior is being tested, not just where it fails

2. Trace the assertion backwards - Start from what's expected and work back through the test setup

3. Check test names and docstrings - They often reveal intent I miss when jumping straight to the error

Root Cause Identification:

1. Ask "why" multiple times:

- Test fails → Why? → Wrong result

- Wrong result → Why? → Incorrect evaluation

- Incorrect evaluation → Why? → Parser treating X as Y

- Parser issue → Why? → Tokenization boundary problem (root cause)

2. Look for patterns across failures:

- Multiple tests failing? Find the common code path

- Similar error messages? Likely same underlying issue

- Tests that used to pass? Check recent changes

3. Validate assumptions explicitly:

# Instead of assuming, add debug prints:

print(f"Token type: {token.type}, value: {repr(token.value)}")

print(f"AST node: {type(node).__name__}, children: {len(node.children)}")

4. Distinguish symptoms from causes:

- Symptom: "AttributeError: 'NoneType' has no attribute X"

- Better question: "Why is this None when the test expects an object?"

- Root cause: Often an earlier parsing/evaluation step failed silently

Practical changes I should make:

- Always read the test's setup/fixtures before looking at the failure

- Run tests with -vv flag more often to see detailed output

- Use debugger/breakpoints instead of just print statements

- Check if similar tests pass - they reveal what's different about the failing case

Finally, what follows are a few side topics that I began to explore in my Substack Notes.

Reflections on Testing and Attention

(Edited from here)

…The tests are in good shape. In the age of AI, they’re easy to maintain, revisit, and cull when better ones replace them. The hard part is growing an insightful suite—and more “eyes into the system” is almost always better than fewer.

The “architectural gaps” don't look too bad to me right now. They mostly cluster around edge cases with how the engine handles temporal logic in its Event Calculus. The gaps seem pretty arcane for my current use interests. Perhaps the LLMs may have leaned a bit too hard on research-paper patterns when prototyping the logic? I’ll keep an open mind, but I don’t want to get bogged down here unless it proves crucial in a way I’m not yet seeing.

The ever-growing number of unit tests reminds me of an old paper emphasizing the importance of "attention" in LLM problem-solving.

Attention Is All You Need: arxiv.org/abs/1706.03762

It seems to jive with what I've been observing in all this debugging and testing with the LLMs — running around with a flashlight and having the LLM fix things that fall within the light is pretty amazing. Because that is where it is paying attention to. However, asking it to imagine the parts out in the dark is something it’s less good at.

Seen that way, the unit tests are like sensors (or “transformers” of a sort). They transform what would be an open-ended, iterative problem-definition loop into a set of discrete checks, each illuminating a specific region of code and behavior. More sensors = more flashlights.

The theory would then be that with the right "system tests," I might be able to drive the LLMs to focus on the right code concerns to get at the "aha."

Milestone on the Horizon

(Edited from here)

I’m pushing to get the solver/engine to a point where it can execute a classic Logic Programming solution (more on that in a future post). This feels like a milestone.

To prepare, I shared both a standard Prolog solution and the engine’s full source code with GPT-5, asking:

“Read this code extremely carefully. How close is it to being able to interpret this solution as-is?”

GPT-5 identified several missing features that would be necessary for the engine to run the Prolog program out-of-the-box. The most notable omission: no support for lists ([H|T]).

Its verdict:

“As-is, you’re ~60–70% of the way there for this program: core parsing, disjunction, static facts, unification, and comparisons are in place.”

Lists aren’t essential for this particular program, though they’re quintessentially Prolog. The same solution could be rewritten in First-Order Logic form (still in Prolog syntax) and avoid lists entirely (spoiler alert!):

murderer(X) :-

contradictsPerson(X, Y1),

contradictsPerson(X, Y2),

Y1 \= Y2.

Next, I gave both GPT-5’s assessment and the Prolog solution to Claude Opus 4.1. Claude was a bit harsher, noting differences in backtracking and limitations in comparisons and negation. Its score:

“The 60–70% estimate seems reasonable, though I’d lower it slightly to 50–60% given these gaps.”

I’m curious how both models arrived at those numbers. If they are actually reasoning, expanding the engine’s feature set to match full Prolog semantics should raise those scores—I might be able to test that!

Finally, in one of my debates with GPT-5, it threw a few playful jabs at Claude: “I partly agree with Claude’s take—” Check out the note for the full context.

Finally

The engine isn’t finished, and we haven’t yet really dug into the interactive fiction parts. But like a lion in winter, it waits, hearing the call of milestones ahead.