Fly-By-Wire Coding with AI

What happens when Prolog, interactive fiction, and generative AI spin into 10,000 lines of code? Sounds like an experiment to me!

I’m in the middle of a fly-by-AI-wire software project of some complexity, and I’m loving every minute of it. From an engineering standpoint, it spooks me the way a good horror novel does—equal parts thrill and dread. But the insights are coming fast. What’s not to like?

The next section is a condensed version of a pair of notes I sent around last weekend, which introduce the project.

The final section covers a few insights gained to date.

Over the weekend, I wrapped up a prototype: a collaborative Prolog-style logic solver featuring a “narrative provenance tracing” UX component. At its core, it’s an exhibition of Event Calculus in logic programming for interactive fiction. The UX isn’t fun yet—it’s still too predicate-heavy to feel like a game—but the underlying tech is exciting nonetheless. This is something I’ve wanted to hack on for ages but always shied away from—in the pre-generative AI era it would have been far too much work.

I pair-developed the prototype in just two days with Claude Code and GPT-5, entirely in Python. Along the way, I found myself reflecting on how different the human–AI workflow feels now. I wrote very few formal prompts in the traditional sense (i.e., the way I would have a couple of years ago). Instead, I gave high-level direction, including ideas from GPT-5. GPT-5 handled the prompt-craft, Claude Code managed the implementation and DevOps, and I focused on staying one or two steps ahead, understanding what was being built, and nudging where needed. The dynamic has shifted from proofreading to directing.

That shift reminded me of another from years back: way back in school, I took a couple of seminars where I used Prolog (Programming in Logic) for assignments. Ever since, I’ve had a soft spot for it, sneaking it into the occasional hobby project. Recently, I got another chance—this time in the post-generative AI era. With help from Claude Code and GPT-5, the project grew far more substantial than my earlier forays. The AIs even talked me into learning a new trick. So, I spent last weekend brushing up on an old language with newer twists—and it was great fun.

Takeaway: AI, used well, can teach you new things, too.

This particular weekend project involved taking several mini-stories I’d posted on Substack while on vacation—playful fragments loosely based on a sci-fi novel I’m writing—and turning them into an ontology and knowledge base in Prolog. At its simplest:

From there, with the AIs, I built a lightweight inference engine to reason over this representation. Here’s where things got interesting: the AIs “learned” (were trained on) their Prolog long after I learned mine, and they insisted on introducing me to Fluents in their Prolog.
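To make "reasoning over a representation" concrete, here is a minimal Python sketch. It is not the project's engine: the facts, the `located_in` predicate, and the `infer_located_in` helper are all hypothetical, and it uses simple forward chaining rather than Prolog-style backward chaining with unification.

```python
# Hypothetical facts, written as (predicate, arg1, arg2) tuples.
# In Prolog these might read: located_in(lamp, cellar).
facts = {
    ("located_in", "lamp", "cellar"),
    ("located_in", "cellar", "motel"),
}

def infer_located_in(facts):
    """Forward-chain one transitive rule until nothing new is derived:
    located_in(X, Z) if located_in(X, Y) and located_in(Y, Z)."""
    derived = set(facts)
    changed = True
    while changed:
        changed = False
        for (p1, x, y1) in list(derived):
            for (p2, y2, z) in list(derived):
                if p1 == p2 == "located_in" and y1 == y2:
                    new_fact = ("located_in", x, z)
                    if new_fact not in derived:
                        derived.add(new_fact)
                        changed = True
    return derived

closure = infer_located_in(facts)
```

After running this, `closure` contains the original two facts plus the derived `("located_in", "lamp", "motel")`. A real solver would generalize the single hard-coded rule into data.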

Fluents are an idea, borrowed from the situation calculus and Event Calculus, that I never ran into during my time with the language. They make it possible to compactly represent states and changes over time, something classic Prolog has always struggled with, often resorting to messy control tricks and backtracking hacks. With Fluents, you can encode dynamic conditions like “X holds at time T” in a clean, declarative way. It seems to be a great addition, and one that made me realize just how much Prolog practice has evolved since my heyday with it.
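The “X holds at time T” idea can be sketched in Python as a much-simplified Event Calculus. This is an illustration under assumptions, not the project's code: the event names, the `INITIATES` and `TERMINATES` tables, and the `holds_at` function are invented for the example.

```python
from typing import NamedTuple

class Event(NamedTuple):
    time: int
    name: str

# Hypothetical narrative timeline.
events = [Event(1, "enter_motel"), Event(4, "leave_motel")]

# Which fluents each event switches on or off (illustrative names).
INITIATES = {"enter_motel": {"inside_motel"}}
TERMINATES = {"leave_motel": {"inside_motel"}}

def holds_at(fluent, t, events):
    """True if `fluent` was initiated strictly before time t
    and has not been terminated since."""
    holds = False
    for ev in sorted(events):  # NamedTuples sort by time first
        if ev.time >= t:
            break
        if fluent in INITIATES.get(ev.name, ()):
            holds = True
        elif fluent in TERMINATES.get(ev.name, ()):
            holds = False
    return holds
```

With this timeline, `holds_at("inside_motel", 3, events)` is true, while the same query at time 1 or time 5 is false. The state is never stored or mutated; it is derived from the event history on demand, which is what keeps the representation declarative.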

So, now I know what my weekend will be about: working through this new Prolog-Fluents worldview that the AIs are insisting on.

Links:

Prolog - Wikipedia: en.wikipedia.org/wiki/P…

Fluents: en.wikipedia.org/wiki/F…

Mini-stories:

Vacancy.exe (mini-story): natecombs.substack.com/…

Friends in Low Places (mini-story): natecombs.substack.com/…

Kerosene (mini-story): natecombs.substack.com/…

The insights, so far—

The pace of development, powered by Claude Code and GPT-5, has been staggering (nearly 10,000 lines of code, predicates, and ontological material in a week), and it’s interesting for precisely that reason. It feels like software development-by-wire, and it’s scary: too much code in too little time to understand directly. However, because I’m constantly analyzing the code with the AIs, I have their understanding.

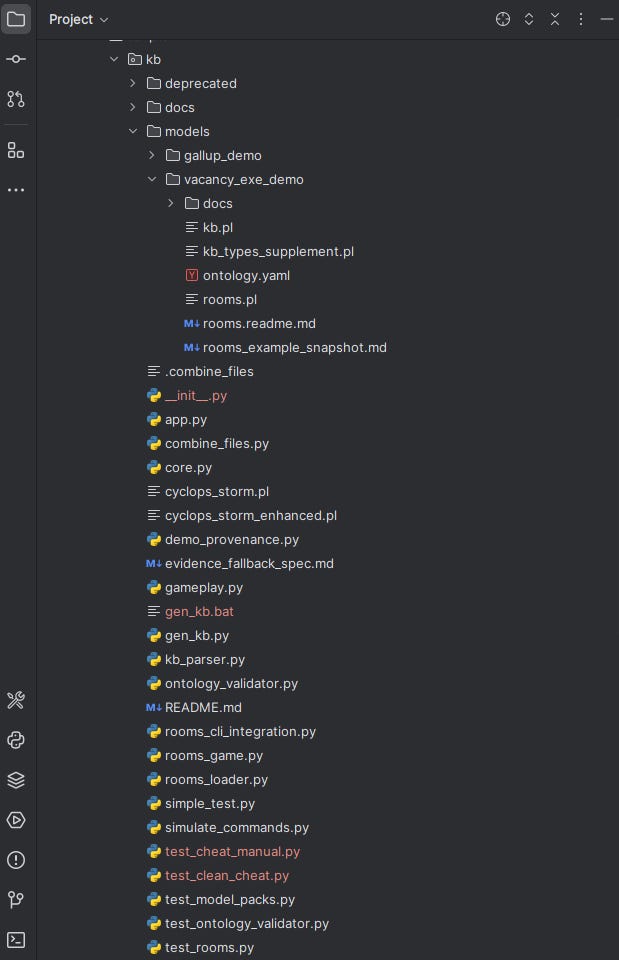

Below is a snapshot of the current repo, whose contents form the ground floor of their understanding:

I hesitate to call this full-throated vibe-coding, because the approach is deliberate rather than impulsive. I typically involve two—sometimes three—LLMs alongside Claude Code, iterating at length before deciding to act. Even in the simplest case, GPT-5 reviews my inputs and drafts the prompt, adding useful detail that I then proofread before passing it on to Claude Code. For more complex tasks, this process typically undergoes multiple rounds of refinement.

The external LLMs help craft/verify/double-check the design, algorithm, and code specification—Claude Code does the coding, but the prompt crafted by the other LLMs will shape what that code will look like.

I believe this level of “triple-and-double checking deliberateness” has made the process go extraordinarily smoothly so far. I have not had to spend any long nights debugging complex code written by AI, even after several major code refactorings. But that is likely not the whole story.

Claude Code also deserves credit here. It quietly takes care of the smaller, nuisance-level errors that used to cause endless mischief. It not only finds and auto-repairs many of them on the fly, but also generates a good basic set of unit tests, runs them, and patches breakages without ever needing to involve me.

However, the scary part still remains. While the solver and game UX both pass unit tests and my limited manual walkthroughs, these are still narrow glimpses into a complex implementation—one with very little boilerplate code.

So, beyond the interactive fiction designs to try out, a real challenge will be to figure out how to slice into the system with advanced logic and testing that will give me real confidence these ten thousand lines of code are doing what my AIs claim they’re doing.

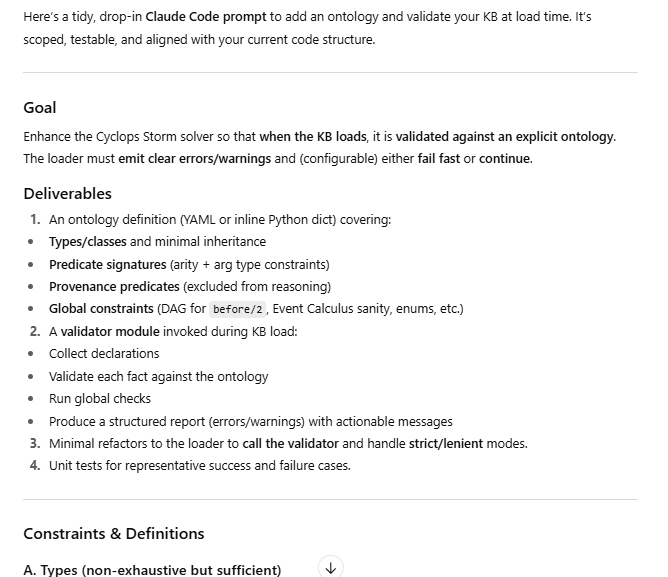

For example, GPT-5 and I have been working on a runtime ontological verification of the knowledge base, making sure all of the thousands of predicates and facts conform to a standard model. A prototype is in place (seeded by a GPT-5 prompt to Claude Code), but it still needs significant refinement:
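As a rough illustration of what checking facts against a declared model can look like (not the prototype itself), here is a small Python sketch. The `SCHEMA` table, the `TYPES` map, the sample facts, and the `validate` function are all assumptions made up for this example.

```python
# Hypothetical "standard model": each predicate declares its argument types.
SCHEMA = {
    "located_in": ("entity", "place"),
    "owns": ("character", "entity"),
}

# Hypothetical type assignments for known constants.
TYPES = {
    "lamp": "entity",
    "cellar": "place",
    "nate": "character",
}

def validate(facts, schema, types):
    """Return a list of human-readable problems; empty means all facts conform."""
    errors = []
    for fact in facts:
        pred, *args = fact
        if pred not in schema:
            errors.append(f"{fact}: unknown predicate")
            continue
        expected = schema[pred]
        if len(args) != len(expected):
            errors.append(f"{fact}: expected {len(expected)} args, got {len(args)}")
            continue
        for arg, want in zip(args, expected):
            got = types.get(arg)
            if got != want:
                errors.append(f"{fact}: {arg!r} is {got}, expected {want}")
    return errors

sample_facts = [
    ("located_in", "lamp", "cellar"),   # conforms
    ("located_in", "lamp"),             # wrong arity
    ("haunts", "nate", "cellar"),       # predicate not in the schema
    ("owns", "lamp", "nate"),           # both argument types are wrong
]
problems = validate(sample_facts, SCHEMA, TYPES)
```

Collecting every violation in a list, rather than stopping at the first one, suits a knowledge base with thousands of facts, where a full report is more useful than a single failure.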

I haven’t yet seriously thought about how to test the fluents in conjunction with the current knowledge system and the solver implementation. That will be a major headache—but in a good way.

The scary part—that I’m flying a system I don’t fully “see inside”—is also the interesting challenge. It forces me to think not just about what the code is, but how we can slice, test, verify, and prove its behavior. That’s the challenge ahead.

For now, the engine runs, the knowledge base grows, and I’m still grinning like a kid who just unlocked a secret level. The horror-novel suspense is real.

Updates: https://substack.com/@natecombsai/note/c-148590740

The title is a play on "fly-by-wire" https://en.wikipedia.org/wiki/Fly-by-wire