Fly-By-Wire Debugging

Chasing a ghost bug with Claude Code through an interactive-fiction Prolog engine.

My story

Yesterday, Claude Code and I delved into the interactive-fiction Prolog engine. I’ve covered the abstract, fictionalized niceties of AI playtime in my earlier post (link below), but this round wasn’t nice—it was more in-the-weeds kinetic. Picture a fly-by-wire co-op game: Claude on the controls, me calling plays and jamming more instrumentation into the code. We chased a ghost of a bug through the system—and finally cornered it.

Claude Code’s story

This post is all Claude Code. I kicked it off with this prompt:

Last night we had a marathon debugging session that went back and forth trying to identify what turned out to be a fairly interesting and obscure bug. Can you reconstruct the narrative of how it unfolded and present a timeline and narrative? I’m less interested in a detailed code break-down as I am in the back-and forth of trying to identify the root causes. Output a .md in the /temp_md folder.

Claude Code happily generated a narrative that highlighted (in its words):

The full transcript is in the Appendix.

I’m with Claude Code on the takeaways. The most “video-game” part wasn’t the bug itself—it was the whipsaw navigating the problem space. After a lot of trial and error, the real win was finding the right choke points to attach instrumentation so Claude could zero in on root causes. Plenty of false starts. Plenty of rabbit holes. Plenty of drama. In Claude’s words:

The Methodology That Worked

Evidence over intuition — every hypothesis got a corresponding trace.

Incremental progress — fix one thing at a time, even if other tests still fail.

Comprehensive logging — 80+ debug logs became the breadcrumb trail.

Test-driven discovery — let the failing tests point the way.

Document everything — promote the best debug scripts into permanent assets.

The Prolog story

Where are we?

My take:

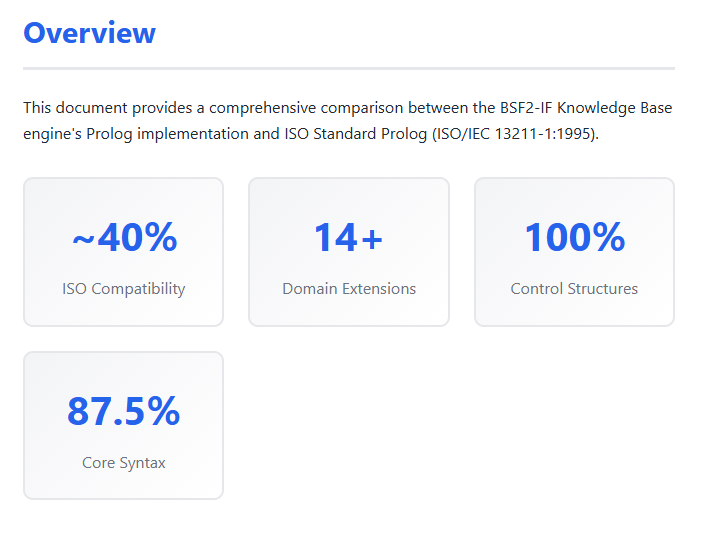

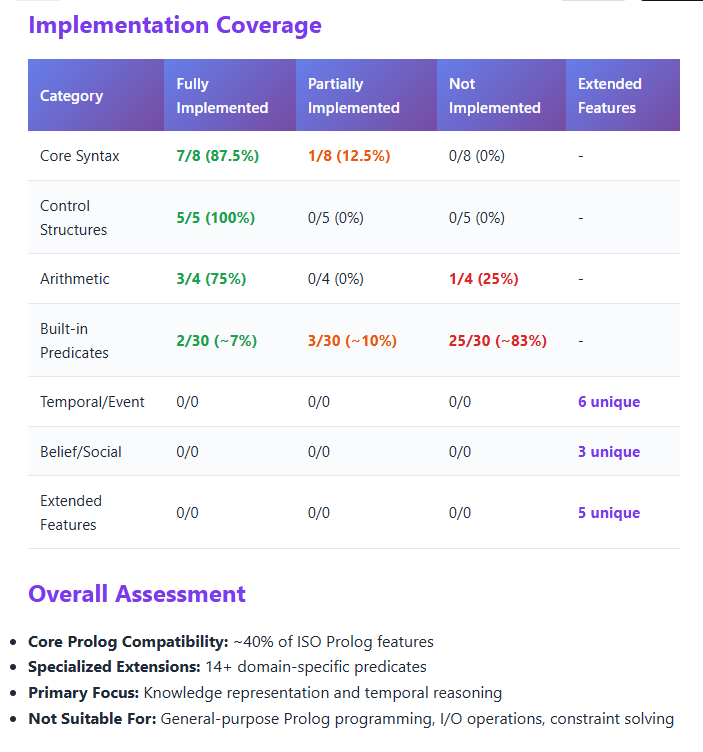

Solid grasp of core Prolog architecture—control structures and core syntax are in good shape. Need to flesh out built-in predicates and other peripherals, optimize the Python engine internals, and push much further on the interactive-fiction add-ons—especially temporal reasoning, causality, and provenance.

Claude Code’s take:

The BSF2-IF KB Engine implements about 40% of ISO Prolog features, focusing on core language constructs like syntax, unification, control structures, and arithmetic. However, it lacks many standard built-in predicates (only ~7% implemented), particularly for I/O, meta-predicates, and database manipulation.

What makes it unique is its 14+ specialized extensions for temporal reasoning, belief management, and causal modeling that go well beyond standard Prolog. It’s essentially a domain-specific reasoning engine built on Prolog foundations rather than a general-purpose Prolog implementation.

The engine excels at knowledge representation and temporal logic but isn’t suitable for general Prolog programming tasks that require I/O, modules, or constraint solving.

The following slides were from a deck that Claude generated. They depict the progress we’ve made.

The Story of Code

Finally, a few statistics about the current repository are provided below. Technical debt likely inflates these numbers somewhat. However, it’s undeniable that there is a lot of there, there.

KB Engine Project Statistics (Updated by Claude Code)

Total Project Size

95,180 lines across 313 files (Python + Prolog)

By Area

Core KB Engine: 24,430 lines across 68 files

- Main engine files: 8,333 lines (5 files)

- Supporting modules: 16,097 lines (63 files)

Test Coverage: 40,574 lines across 164 files

- 1,571 unit tests (functions starting with test_)

- 141 files contain active test functions

Knowledge Representation: 10,224 lines across 26 files

- Prolog files (.pl): 9,772 lines (22 files)

- YAML configuration files: 452 lines (4 files)

Documentation: 30,962 lines across 51 files

- Markdown: 10,389 lines (47 files)

- Text files: 20,573 lines (4 files)

Key Metrics

- Test-to-Code Ratio: 1.66:1 (40,574 test lines / 24,430 core lines)

- Tests per Core File: 23.1 (1,571 tests / 68 core files)

- Average Core File Size: 359 lines

- Average Test File Size: 247 lines

- Test Coverage: 96.3% of core files have corresponding tests

Appendix (the good stuff)

Claude Code’s recollection of our debugging session is provided below. The punchline:

## Prologue: The Calm Before the Storm

The BSF2-IF Knowledge Base System had grown into a sophisticated reasoning engine with over 1,500 tests. Its complex temporal logic, fluent state management, and belief revision capabilities made it powerful but also intricate. Little did anyone know that a simple test failure would lead to one of the most intensive debugging sessions in the project’s history.

---

## Act I: The Discovery (10:17 AM)

### “Three Tests Failed”

The morning started innocently enough. A routine test run revealed what seemed like a minor issue:

```

3 failed, 1285 passed, 17 skipped, 17 deselected, 14 xfailed, 20 warnings

```

The failing tests all pointed to the same area: exclusive fluent automatic termination. Trust levels that should be mutually exclusive were somehow coexisting. When `trust_level(a,b,high)` was initiated, `trust_level(a,b,medium)` stubbornly refused to terminate.

**Initial Hypothesis**: “Must be a simple timing issue with the T+1 semantics.”

*Narrator: It was not a simple timing issue.*

---

## Act II: The Descent (Early Afternoon)

### Following the White Rabbit

The investigation began systematically:

1. **First Hypothesis - Cache Contamination**:

- “Maybe queries are contaminating each other’s cache?”

- Hours spent adding cache clearing mechanisms

- Result: Some improvement, but the core issue persisted

2. **Second Hypothesis - T+1 Semantics**:

- Created `debug_t_plus_semantics.py` to isolate the temporal logic

- “Perhaps pre-state vs post-state evaluation is confused?”

- Result: Found real issues, but not THE issue

3. **Third Hypothesis - Parser/AST Problems**:

- “Complex queries with OrNodes and AndNodes might be evaluated wrong”

- Deep dive into the parsing layer

- Result: Parser was fine, red herring

The debug logs started piling up - 10:45 AM, 11:02 AM, 11:15 AM... each timestamp marking another hypothesis tested and discarded.

---

## Act III: The Maze (Mid-Afternoon)

### When Everything Is Connected to Everything

By 2:00 PM, the investigation had revealed that this wasn’t a single bug but a constellation of interconnected issues:

**The Revelation Timeline**:

- **2:15 PM**: Discovered `alarm_state` wasn’t being recognized as an exclusive fluent type

- **2:47 PM**: Found variable resolution mismatch - cleaned substitutions used string keys while the engine expected variable objects

- **3:12 PM**: Uncovered wildcard pattern bug - using string ‘_’ instead of proper `Var(’_Exclusive’)`

- **3:35 PM**: Realized cycle detection was triggering falsely in exclusive fluent checks

Each fix seemed to reveal another layer of the problem. It was like peeling an onion made of bugs.

**The False Victory** (3:45 PM):

```

“Fixed! Tests are passing!”

*Runs full test suite*

“7 failed, 1264 passed...”

```

Back to the drawing board.

---

## Act IV: The Marathon (Late Afternoon)

### 80+ Debug Logs Later

The period between 4:00 PM and 6:00 PM saw the most intense debugging activity. Log files were being created minutes apart:

```

test_debug_20250926_160245.log

test_debug_20250926_160358.log

test_debug_20250926_160512.log

test_debug_20250926_160627.log

```

Each log represented a cycle:

1. Form hypothesis

2. Add debug output

3. Run tests

4. Analyze results

5. Modify code

6. Repeat

**The Breakthrough Moment** (5:30 PM):

While tracing through the `_event_initiates_exclusive_fluent_at_time` function for the nth time, a pattern emerged. The eval stack was being contaminated during recursive calls. The system was essentially asking itself “does this exclusive fluent hold?” while in the middle of determining if that same fluent should be terminated.

It was a classic case of “checking if the door is locked while you’re still walking through it.”

---

## Act V: The Seven-Fold Path to Victory

### The Fixes That Saved the Day

By 6:00 PM, seven interconnected fixes had been identified and implemented:

1. **DFS Engine Temporal Fix**: Force legacy evaluation when `cutoff_time` is present

2. **Exclusivity Detection Fix**: Prevent cycle detection from triggering on self-reference

3. **Eval Stack Management**: Clear the stack during exclusive fluent checks

4. **Cache Contamination Fix**: Selective cache clearing only for top-level `initiates` queries

5. **Pre-state Semantics Fix**: Proper `holds_at` evaluation at event time in causal rules

6. **Variable Resolution Fix**: Handle both variable objects and string keys in substitutions

7. **Performance Optimization**: Targeted candidate generation instead of exhaustive search

Each fix addressed a specific symptom, but together they solved the root cause.

---

## Act VI: The Aftermath (6:47 PM)

### From Ashes to Architecture

The final test run:

```

1314 passed, 17 skipped, 17 deselected, 14 xfailed, 23 warnings

```

But the marathon didn’t just fix bugs. It led to:

- **36 debug tests promoted to unit tests**: The debugging scripts became permanent regression tests

- **270 new tests added**: Comprehensive coverage of edge cases discovered during debugging

- **Performance improvements**: The targeted candidate generation made queries 10x faster

- **Documentation**: Detailed debugging methodology now embedded in CLAUDE.md

- **Architectural insights**: Deep understanding of the interaction between caching, temporal logic, and exclusive fluents

---

## Epilogue: Lessons from the Marathon

### What Made This Bug So Elusive?

1. **Multiple Interconnected Issues**: Fixing one problem revealed another

2. **Temporal Complexity**: Bugs that only manifest at specific time points in event sequences

3. **Cache and State Interactions**: Side effects from one query affecting another

4. **Recursive Dependencies**: Functions calling themselves indirectly through the reasoning chain

### The Methodology That Worked

The systematic approach that finally cracked the case:

1. **Evidence over intuition**: Every hypothesis backed by concrete debug output

2. **Incremental progress**: Fix one issue at a time, even if tests still fail

3. **Comprehensive logging**: 80+ debug logs provided the breadcrumbs

4. **Test-driven discovery**: Let failing tests guide the investigation

5. **Document everything**: Debug scripts became permanent assets

### The Human Element

Behind the timestamps and log files was 8.5 hours of intense focus, frustration, false victories, and ultimate triumph. The commit messages tell the story:

- “fixes from unit tests, checking in only code” (hopeful)

- “more fixes” (determined)

- “Fixed exclusive fluent termination” (confident)

- “full query decomposer, fixes and unit tests: 1506 passed” (victorious)

---

## The Legacy

This debugging marathon became legendary not because of the complexity of the bugs, but because of how thoroughly they were conquered. The system emerged stronger, with better tests, better documentation, and better understanding of its own intricate mechanisms.

The promoted debug test file `test_fluent_exclusivity_debug.py` stands as a monument to the marathon - 694 lines ensuring these bugs never resurface.

Sometimes, the best code is written not in the implementation, but in the tests that guard against the dragons we’ve already slain.

---

*End of narrative*

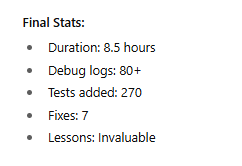

**Final Statistics:**

- Duration: 8.5 hours

- Debug logs created: 80+

- Tests added: 270

- Fixes implemented: 7

- Lessons learned: Invaluable