From Code to Context And Back Again

Scaffolding Story Beats with Agentic AI

Have you noticed how “writing code” just isn’t what it used to be? Or is it just me? In the age of AI, the very definition of code seems to be morphing before my eyes. During my Bad Science Fiction 1.0 sprint, I ended up juggling seven GitHub repos—packed with all sorts of scripts, proof-of-concepts, and experimental pipelines that my “AI consultants” and I worked with. I keep them alive not because their code is useful in themselves, but because they form my personal “context library”—a sandbox of Context Engineering artifacts I can always return to. (More about this in a minute) And here’s the surprise: I find myself captivated far more by the context-engineering artifacts—the prompts that drive these models—than by the code itself.

I don’t seem to be the only one. As Laura Tacho suggests, AI has made many forms of coding almost trivial; the real skill increasingly lies in AI wrangling. In other words, we’re shifting from building logic to sculpting problems and context. That’s what it feels like to me, anyway.

In my recent posts, I’ve been exploring agentic coding—a shift that’s reshaping how I am thinking about AI/development. (See here and here).

Beyond my AI coding experiments, I also work with science fiction manuscripts—both large and small—and I often utilize large language models to help analyze them. One of my favorite uses is spotting logic inconsistencies (I’ve written a bit about that here). LLMs are great for this, but when it comes to breaking down and analyzing story beats in a fine-grained way, they often overlook the details: when I feed them a 25K+ word document and get back a thorough-looking report, I can’t shake the feeling that they might not have truly examined everything. It’s probably just like us, we latch on to the big things and “yeah, yeah” our way past what seems like the minor stuff.

This brings me to the motivation behind today’s post: I wanted to quickly develop a “scaffolding” tool—a script that can extract the low-level story beats from a manuscript and perform a rapid analysis of their semantic similarity (using embeddings). I’ve done plenty of work like this before, so I already have a lot of context to build on. The goal here is simple: a lightweight script for quick analysis—something I can review myself and also use as input for another AI prompt.

Working with AI—using the approach I outlined here (Claude Code + reasoning AIs)—we quickly produced about 600 lines of Python that implemented the following algorithm. (I asked Claude to summarize the code in two paragraphs.)

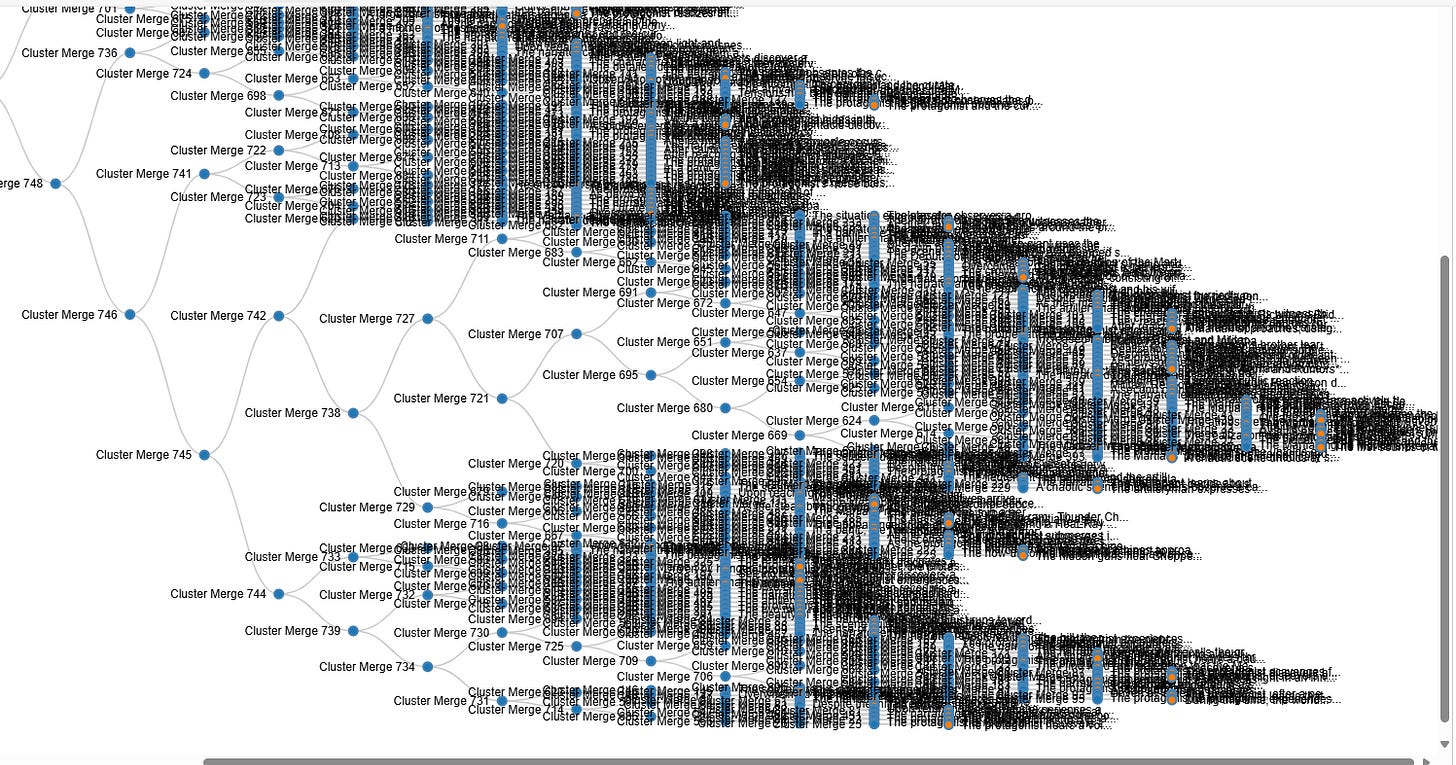

It generates an exhaustive list of low-level story beats and their hierarchical relationships (for example, a 3MB HTML/text file representing H.G. Wells’ War of the Worlds, available on Open Source). It also includes a simple JavaScript viewer attached to the text report, giving me a quick and easy way to browse the data. See the figure below.

Now we reach the core of this post. The prototype code was trivially generated and executed flawlessly using the process I described earlier. Most of the effort went into crafting the initial prompt that Claude Code used to produce the first version of the script. I spent about forty minutes refining it with the help of OpenAI’s o4 and o3 reasoning models—my “AI consultants”—packing in as much relevant context as I could think of at the time. Below is the initial prompt - it’s packed with detail and references that my AIs gleaned from my Bad Science Fiction experiment repositories, which Claude Code leveraged.

You are **CodeSmith**, an expert Python developer versed in OpenAI’s Python SDK, semantic embeddings, and the StoryDirector architecture. Generate a new module named `story_scaffold.py` implementing the four functions below, using the provided `config.py` and examples as guidance. When the program runs, it must write two files:

* `scaffold_<YYYYMMDD_HHMMSS>.yaml` — containing the raw, per-window events

* `scaffold_<YYYYMMDD_HHMMSS>.html` — rendering those raw events alongside the final, consolidated events

**A. Setup & Dependencies**

* Python ≥3.8

* Top-of-file imports: `os`, `yaml`, `openai`, `logging`, `numpy as np`, plus any from `langchain` (for embeddings)

* Configure `logging` with both `StreamHandler()` and `FileHandler("logging_output.txt")`

* Use `config.py`’s `create_openai_client()` to obtain `client`

**B. Helper**

```python

def _cosine_similarity(vec1: List[float], vec2: List[float]) -> float:

a, b = np.array(vec1, float), np.array(vec2, float)

na, nb = np.linalg.norm(a), np.linalg.norm(b)

return 0.0 if na == 0 or nb == 0 else float(np.dot(a, b) / (na * nb))

```

**C. Function Specifications**

1. **extract\_raw\_windows(path: str, window\_size: int = 500, stride: int = 250) → List\[str]**

* Read the UTF-8 text file at `path`.

* Split into overlapping sliding windows of **words**, each of size `window_size`, advancing by `stride`.

* On I/O error, log and raise or return `[]`.

* Return ordered list of window strings.

2. **embed\_windows(windows: List\[str], client: OpenAI, batch\_size: int = 16) → List\[Tuple\[str, List\[float]]]**

* Batch `windows` in groups up to `batch_size`.

* For each batch, initialize `OpenAIEmbeddings` via LangChain using the same key & model as in `config.py`.

* Call `.embed_documents(batch)` (or similar), catching API errors: log to `logging_output.txt` and skip failed batch.

* Return the full list of `(window_text, embedding_vector)` tuples in the original order.

3. **extract\_events\_from\_windows(windows: List\[str], client: OpenAI, model: str = "gpt-4o-mini") → List\[List\[str]]**

* For each window, call the LLM with:

```python

system: "You are a story beat extractor. Given a slice of narrative, identify its 1–3 key story events."

user: "<window_text>"

```

* Parse the model’s plaintext or JSON reply into a `List[str]`.

* On parse or API failure, log and return `[]` for that window.

* Return a list of these event-lists, aligned with `windows`.

4. **merge\_similar\_events(all\_window\_events: List\[List\[str]], embeddings: List\[List\[float]], similarity\_threshold: float = 0.8) → List\[str]**

* Flatten `all_window_events` into a single list of `(event_text, embedding)` in their original order.

* Greedy-cluster by cosine similarity ≥ `threshold`:

* For each `(text, emb)`, compare to each existing cluster centroid (mean of its embeddings).

* If any ≥ threshold, append to that cluster and update its centroid; else start a new cluster.

* For each cluster, join member texts with `" | "` to produce one consolidated description.

* Return the list of consolidated event descriptions, in cluster-creation order.

**D. File Outputs & Usage Example**

At the end of `story_scaffold.py`, include:

```python

if __name__ == "__main__":

client = create_openai_client()

windows = extract_raw_windows("storyline.txt", window_size=500, stride=250)

raw_pairs = embed_windows(windows, client)

window_events = extract_events_from_windows(windows, client)

embeddings = [emb for _, emb in raw_pairs]

consolidated = merge_similar_events(window_events, embeddings, similarity_threshold=0.85)

# Write YAML and HTML outputs

timestamp = datetime.now().strftime("%Y%m%d_%H%M%S")

yaml_file = f"scaffold_{timestamp}.yaml"

html_file = f"scaffold_{timestamp}.html"

# (serialize raw window_events to YAML, then generate an HTML page highlighting consolidated events)

print(f"YAML output: {yaml_file}")

print(f"HTML output: {html_file}")

```

**E. Documentation & Style**

* Google-style docstrings with full type hints on every function

* Robust `try/except` around all file I/O, embedding, and LLM calls, using `logger.error(..., exc_info=True)`

* No Markdown fences—output must be valid, directly runnable Python code only.

I iterated the above prompt with my AI consultants several times to get it just right — the right level of detail and completeness, and without inconsistencies. I then fed the prompt to Claude Code — it generated the Python code that worked as intended without modification — about 500 lines worth.

From there, I went through a process of script tweaking—letting Claude Code handle the actual coding while my AI consultants helped craft the prompts. Claude Code runs on the Claude Sonnet 4 model, a powerful reasoning LLM, and sure, I could have skipped the middle step and fed my requests directly to it. But I prefer the two-step process. It creates a clean separation of concerns: I can review and sanity-check each detailed prompt before it is submitted, and Claude Code receives a clearer, more structured set of instructions to work with.

I broke my goals down into a series of small, focused prompts that my AI consultants translated into a more contextualized prompt. We were making incremental updates to the code each time. Could I have rolled everything into one massive prompt and aimed for a big-bang update? Sure. However, analogous to human development, I find that decomposing big tasks into smaller, iterative ones is far more effective with coding AIs. Everything went off without a hitch. Below is the list of “mini-prompts” I used to refine that first implementation. We ended up with over 600 lines of code.

Here are the mini-prompts.

1. Please write a prompt for Claude Code instructing it to update the following code so that it outputs in the HTML file a hierarchical roll-up of the raw events into the top level event categories.

2. Please write a prompt for Claude Code that will depict the order of roll-up of the raw of events under each consolidated category.

3. Can you write a prompt for Claude Code using the updated code to use hierarchical agglomerative clustering (HAC) to roll-up events into categories. The hierarchy should be represented in the HTML output.

4. Can you write a prompt for Claude Code that requests that the HTML output includes a javascript script to depict the HAC results in an interactive hierarchical graph. The graph should depict the merging process hierarchically. The user should be able to mouse over leaf nodes to see the raw events.

5. Can you write a prompt for Claude Code that requests that the javascript hierarchical graph display be scrollable on a larger display surface. Right now it is too crowded into a small display area.

6. Can you write a prompt for Claude Code that requests that the intermediate nodes in the javascript hierarchical graph will pop-up the rolled up events below it with a mouse-over? Right now, only the leaf nodes will display their raw event text.Below is an example output from o4 LLM (OpenAI) — one of my “AI consultants.” It is a more detailed and contextualized translation of my #5 prompt (above).

You are **CodeSmith**, an expert Python developer. The interactive D3.js hierarchical graph in your generated HTML is currently cramped into a small fixed area. Update `story_scaffold.py` so that the graph is rendered in a **scrollable**, **larger** display surface:

1. **Scrollable Container**

* Wrap the `<svg>` for the D3 tree inside a `<div id="tree-container">` (or its parent) styled with:

```css

#tree-container {

width: 100%;

height: 800px; /* or another generous height */

overflow: auto; /* enable both scrollbars */

border: 1px solid #ccc;

}

```

2. **SVG Sizing**

* In your D3.js script, set the SVG’s `width` and `height` attributes to match the full scrollable area (e.g. use the container’s clientWidth/clientHeight or hard-code larger dimensions such as 2000×1200).

* Ensure the SVG is appended **inside** the `#tree-container` div so that overflow works.

3. **HTML Template Edits**

* Adjust the HTML generation block in `story_scaffold.py` to include the updated CSS and the scrollable container around the D3 graph.

* Everything else (data embedding, D3 tree code, tooltips) should remain unchanged.

4. **Return**

* The full, updated `story_scaffold.py` file with these changes applied.

* Only valid, runnable Python code (no Markdown fences).

Below is your current `story_scaffold.py` HTML snippet for context—apply edits around it to enable scrolling and a larger display area:

```html

<div id="tree-container"></div>

<script>

// existing D3.js code that appends an <svg> inside #tree-container

const svg = d3.select("#tree-container")

.append("svg")

.attr("width", 600) // change this

.attr("height", 400); // and this

// ...

</script>

```

Key Takeaways

By treating prompts as first-class artifacts—and breaking big tasks into bite-sized iterations—I was able to generate, test, and refine over 600 lines of runnable Python in a couple of hours. This two-step dance between my AI consultants and Claude Code not only kept me in control of the process but also produced a “scaffold” for dissecting my manuscripts into their raw story beats. Whether you’re wrangling code or panning for narrative gold, it pays to architect your AI agentic workflow with clear separation of concerns, iterative mini-prompts, and a healthy dose of context engineering. Here’s to the next frontier: sculpting algorithmic stories as deftly as we sculpt code.