My Late Night Model Context Protocol Road Trip, On y va!

An initial Claude Desktop integration success, and the ghost in the machine...

Last time I described how I stayed up (nearly) all night starting on what will be a series of Model Context Protocol investigations — or “road trips.”

Below is my postcard from last time:

My Late Night Model Context Protocol Wolfman Jack Dedication


I’ll keep this one brief, as this is just another interim report—another I-40 phone home moment on the road to Tucumcari. Ultimately, I hope to write something detailed and exacting when this is over, but for now… notes to self.

Good news! After some difficulty working with Claude Desktop last time, I was able to integrate the back-end Bad Science Fiction Python service into the Claude Desktop application:

Installing Claude for Desktop | Anthropic Help Center

The Model Context Protocol (MCP) GitHub site was a huge productivity boost. I used their Python SDK:

Model Context Protocol (MCP SDKs)

Note to self: While I made a lot of progress last time with my AI consultants bootstrapping up from the MCP spec, nothing beats starting with clean code examples and a working SDK!

However, I spent a lot of time refactoring the Bad Science Fiction (BSF) Server code this time. While cleaning up technical debt is worthwhile in its own right, this refactor was also necessary to ensure compatibility with the approach I planned, per the architecture below.

    +------------------+          +-----------+            +-------------+
    |  Claude Desktop  |  <--->   |   Tool    |   <--->    | BSF Server  |
    +------------------+          +-----------+            +-------------+
             |                          |                         |
             |  HTTP Request            |                         |
             |------------------------->|                         |
             |                          |  API Call (REST)        |
             |                          |------------------------>|
             |                          |                         |
             |                          |  Response (JSON)        |
             |                          |<------------------------|
             |  JavaScript              |                         |
             |<-------------------------|                         |
             |                          |                         |
             |  Executes & Renders      |                         |
             |<------------------------>|                         |
             |  (UI Updates, DOM)       |                         |
             |                          |                         |
After discussing the best refactoring pattern with my AI consultants, I chose an ASGI middleware/adapter approach: break the top-level server code into three blocks and mount the MCP (Model Context Protocol) endpoint with “app.mount("/mcp", mcp_app)”. See below.

                         +----------------+
                         |     Client     |
                         +-------+--------+
                                 |
                                 v
                     +-----------------------+
                     |  FastAPI Application  |
                     |  (Main Entry Point)   |
                     |                       |
                     |  Endpoints:           |
                     |  /health, /analyze,   |
                     |  /embed_anchor, etc.  |
                     +-----------+-----------+
                                 |
               +-----------------+----------------+
               |                                  |
               v                                  v
    +----------------------+          +------------------------+
    | Core Analysis &      |          | MCP ASGI App (Mounted  |
    | NLP Module           |          | at /mcp)               |
    | (core_sentiment_     |          |                        |
    | server.py & Helpers) |          | - Tools (e.g., echo,   |
    |                      |          |   ask_bad_scifi)       |
    | - Text Analysis      |          +------------------------+
    | - Embeddings         |
    | - Visualization      |
    | - Caching (Redis)    |
    +----------------------+
               |
               v
    +------------------------+
    | External Services      |
    | (Redis, NLTK, etc.)    |
    +------------------------+
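To make the mounting idea concrete, here is a small standard-library sketch of what "mount a sub-application at /mcp" means at the ASGI level. It is not the BSF server code and not how FastAPI implements mounting internally: in the real server the whole thing is the single line app.mount("/mcp", mcp_app). The `mount` helper, the two stand-in apps, and the choice to strip the prefix from the path are all illustrative assumptions.

```python
import asyncio


async def main_app(scope, receive, send):
    """Stand-in for the main application and its /health endpoint."""
    ok = scope["path"] == "/health"
    await send({
        "type": "http.response.start",
        "status": 200 if ok else 404,
        "headers": [(b"content-type", b"text/plain")],
    })
    await send({"type": "http.response.body", "body": b"ok" if ok else b"not found"})


async def mcp_app(scope, receive, send):
    """Stand-in for the MCP sub-application; echoes the path it was handed."""
    await send({
        "type": "http.response.start",
        "status": 200,
        "headers": [(b"content-type", b"text/plain")],
    })
    await send({"type": "http.response.body", "body": f"mcp saw {scope['path']}".encode()})


def mount(parent, prefix, child):
    """Hypothetical helper: send requests under `prefix` to `child`, the rest to `parent`."""
    async def app(scope, receive, send):
        path = scope["path"]
        if path == prefix or path.startswith(prefix + "/"):
            # Hand the child a scope whose path is relative to the mount point.
            child_scope = dict(
                scope,
                path=path[len(prefix):] or "/",
                root_path=scope.get("root_path", "") + prefix,
            )
            await child(child_scope, receive, send)
        else:
            await parent(scope, receive, send)
    return app


app = mount(main_app, "/mcp", mcp_app)


async def get(app, path):
    """Drive an ASGI app with a fake GET request and collect (status, body)."""
    sent = []

    async def receive():
        return {"type": "http.request", "body": b"", "more_body": False}

    async def send(message):
        sent.append(message)

    await app({"type": "http", "method": "GET", "path": path, "root_path": ""}, receive, send)
    return sent[0]["status"], sent[1]["body"].decode()


print(asyncio.run(get(app, "/health")))     # (200, 'ok')
print(asyncio.run(get(app, "/mcp/tools")))  # (200, 'mcp saw /tools')
```

The appeal of this pattern for a refactor is that the existing endpoints and the MCP endpoint stay separate applications behind one entry point, so either can change without touching the other.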

Note to self: The AI consultants helped discuss/flesh out the approach. They helped a lot by analyzing the existing code and pointing out trade-offs. I did all of the refactoring by hand, however. I thought it would be faster this way, rather than setting them up with all the context they would have needed and designing the prompts, etc.

While the BSF server is being rebuilt to support the full Model Context Protocol, I was only focused on exercising the Claude Desktop API this time.

I was able to integrate the BSF server as a tool by working through the MCP SDK examples (from the GitHub link mentioned earlier). Once integrated, it appears in Claude Desktop as a hammer icon (see Figure 1).

Note to self: It’s never as painless as you’d hope—but as integrations go, this one wasn’t too bad.

The tool endpoint was named “ask_bad_scifi”. Its definition contains enough information for Claude to reason that prompts like the one below should be directed to the tool:

Ask bad scifi the following, and then display the JavaScript results.

Hello, the bad science fiction road trip. Welcome! Consider today's moment:

The storm surged, flinging rhizomes across the horizon. The knotweed heaved and howled closer, an uninvited guest growing with unnatural speed -- the ground shook and growled.

A thunder here, a thicket sprouting there – fearsome and impressive enough. Emily thought it would be another day or two before it neared. But Emily had underestimated it. The knotweed was faster—much faster—than anyone predicted. What was supposed to take days would engulf them in hours. She wasn’t ready. And her mum—out there somewhere in the desert—would be consumed if she didn’t find her soon.

The desert recoiled at the invader, disturbed by its writhing greenery. Sand, rock, dust, scorpions, and cholla bristled in protest, unwilling to trade their harsh beauty …
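For readers who have not seen one, an MCP tool is advertised to the client as a name, a description, and a JSON Schema for its input, and that is what lets Claude match a prompt like the one above to the tool. The sketch below shows roughly what such a definition and a dispatcher could look like. Only the tool name ask_bad_scifi comes from this post; the description text, the `text` argument, the `call_tool` helper, and the fake backend are assumptions for illustration.

```python
import json

# Hypothetical descriptor: only the name "ask_bad_scifi" is taken from the post.
ASK_BAD_SCIFI_TOOL = {
    "name": "ask_bad_scifi",
    "description": (
        "Send a story fragment to the Bad Science Fiction (BSF) server "
        "and return JavaScript that visualizes the analysis."
    ),
    "inputSchema": {
        "type": "object",
        "properties": {
            "text": {"type": "string", "description": "Story fragment to analyze"}
        },
        "required": ["text"],
    },
}


def call_tool(name, arguments, backend):
    """Check a tool call against the descriptor and forward it to `backend`.

    `backend` is any callable taking the story text; it stands in for the
    REST call to the BSF server.
    """
    if name != ASK_BAD_SCIFI_TOOL["name"]:
        raise ValueError(f"unknown tool: {name}")
    required = ASK_BAD_SCIFI_TOOL["inputSchema"]["required"]
    missing = [key for key in required if key not in arguments]
    if missing:
        raise ValueError(f"missing arguments: {missing}")
    # Tool results are returned as a list of content items.
    return {"content": [{"type": "text", "text": backend(arguments["text"])}]}


def fake_backend(text):
    """Placeholder for the real analysis; returns a one-line JavaScript comment."""
    return f"// analysis of {len(text.split())} words"


result = call_tool(
    "ask_bad_scifi",
    {"text": "The knotweed heaved and howled closer"},
    fake_backend,
)
print(json.dumps(result, indent=2))
```

With the MCP Python SDK most of this bookkeeping is handled for you; the point here is only to show which pieces of information the client gets to reason over.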

An essential detail in this example is that the server returns customized JavaScript (with the data hardcoded within it) that Claude can then reason over and execute to render a result.
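A rough sketch of that "data hardcoded into the script" idea, written on the Python side. This is not the BSF server's generator; the function name, the score dictionary, and the `bsf-chart` element id are made up for illustration. The only technique being shown is serializing the data with `json.dumps` and splicing it into the JavaScript as a literal.

```python
import json


def build_visualization_js(scores, element_id="bsf-chart"):
    """Return a JavaScript snippet with `scores` embedded as a literal.

    `scores` is a hypothetical mapping of label -> number; the generated
    script writes one line per entry into the element with `element_id`.
    """
    data_literal = json.dumps(scores)      # valid as a JS object literal
    id_literal = json.dumps(element_id)    # safely quoted JS string
    return (
        f"const data = {data_literal};\n"
        f"const root = document.getElementById({id_literal});\n"
        "for (const [label, value] of Object.entries(data)) {\n"
        "  const row = document.createElement('div');\n"
        "  row.textContent = `${label}: ${value}`;\n"
        "  root.appendChild(row);\n"
        "}\n"
    )


js = build_visualization_js({"dread": 0.8, "wonder": 0.3})
print(js)
```

Because the script carries its own data, whoever executes it needs no second round trip to the server, which is what makes it convenient to hand to Claude as a single tool result.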

In today’s experiments, I expected the return output to be similar to that of Figure 2, taken from one of the BSF server’s established visualization endpoints.

However, at the time of this writing, the code produced a curious top-level map-like visualization with scrolling story text. See Figure 3.

It wasn’t what I was aiming for, but I was intrigued—and needing sleep—so I left it as-is for now. I’ll revisit it later to understand exactly what my AI consultants did. It’s probably nothing (and I’ll ask them to correct it), but there’s a chance they stumbled onto something interesting.

Note to self: The AI consultants generated the JavaScript translation code in response to a simple prompt (something like): Take a look at this Python visualization code and generate a JavaScript version with the data hard-coded into it.

Updated/Edited 4/5 -

Got some sleep, took a dive into Figure 3, and ran several new scenarios. A few interesting findings:

If you look closely at Claude’s response in Figure 3, it comes from a scenario where the tool couldn’t connect to the BSF Python server. In this case, Claude improvised and generated the JavaScript on its own, creating a “game-like” display featuring moving green orbs that represent the knotweed from the story fragment.

In other cases where Claude couldn’t reach the BSF Python server, it didn’t simulate the story fragment. Instead, it implemented an “Analysis display,” generating various views focused on analyzing the story fragment's contents. See Figure 4 for one example.

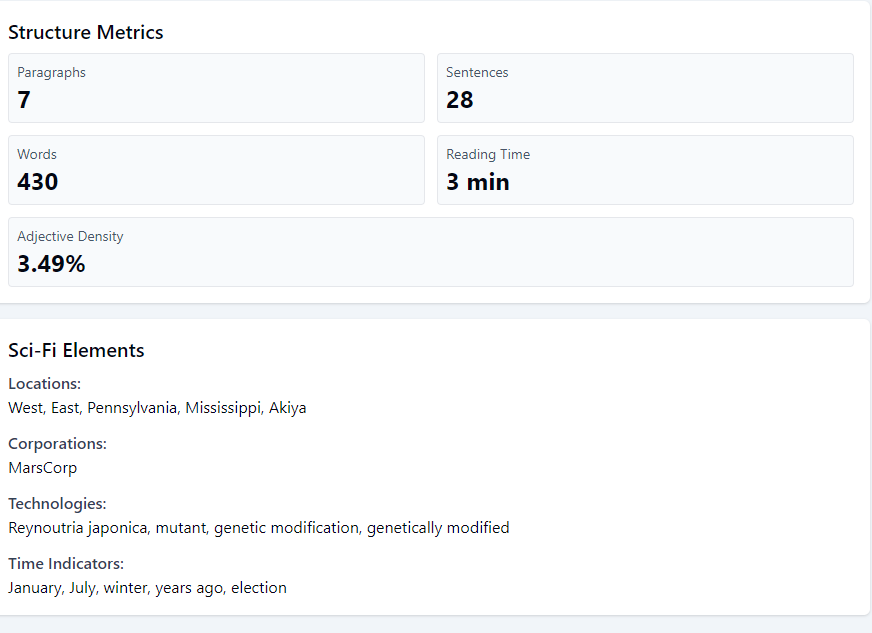
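Since both findings trace back to the tool failing to reach the BSF Python server, one option is to have the tool report that failure explicitly in its result. MCP tool results can carry an `isError` flag alongside their content. The sketch below assumes a hypothetical URL for the /analyze endpoint, a hypothetical `{"text": ...}` request body, and an injectable `opener` so the failure path can be exercised without a network. Whether Claude would still improvise when told about the failure is not something this sketch settles.

```python
import json
import urllib.error
import urllib.request

BSF_URL = "http://localhost:8000/analyze"  # hypothetical address for the BSF server


def ask_backend(text, url=BSF_URL, timeout=5.0, opener=urllib.request.urlopen):
    """POST the story text to the backend and wrap the outcome as a tool result."""
    payload = json.dumps({"text": text}).encode("utf-8")  # assumed request shape
    request = urllib.request.Request(
        url, data=payload, headers={"Content-Type": "application/json"}
    )
    try:
        with opener(request, timeout=timeout) as response:
            body = response.read().decode("utf-8")
    except OSError as exc:  # URLError and socket timeouts are OSError subclasses
        return {
            "isError": True,
            "content": [{"type": "text", "text": f"BSF server unreachable: {exc}"}],
        }
    return {"isError": False, "content": [{"type": "text", "text": body}]}


def refusing_opener(request, timeout=None):
    """Test double that behaves like an unreachable server."""
    raise urllib.error.URLError("connection refused")


result = ask_backend("The knotweed heaved and howled closer", opener=refusing_opener)
print(result["isError"], result["content"][0]["text"])
```

Surfacing the error this way at least makes it visible in the transcript that the visualization was not produced by the BSF server.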

Takeaways

1. Use SDKs and trusted/working code samples if you have them. Your AI consultants probably know them anyway, so don’t ask them to reinvent the wheel.

2. The AI consultants didn’t contribute much actual code during today’s integration effort. It was quicker just to do it myself rather than explain it to them. That said, they were incredibly helpful during the planning phase, helping me think through what I was about to do. They were also invaluable for debugging errors and sorting out API issues.

3. Stay open to serendipity. Often, the AI consultants misinterpret your intent and generate something unexpected, and you will correct them. On (rare) occasions, that detour can lead to something genuinely interesting. Typically, it’s just a miss, but in the past, when I paid attention to those misfires, some of them sparked ideas I hadn’t considered.

Note to self: There are echoes with #3 regarding the current rage of “Vibe Coding” — or at least the best parts of it. It’s complicated. When I return from my road trip, I may have a few things to say about the whole subject.

What is Bad Science Fiction?

BSF, or Bad Science Fiction, describes a collaborative software project on GitHub. As explained in the AI Advances articles, the project is so named because its ostensible goal is to develop a story analysis tool specializing in science fiction—a tool to be developed in collaboration with Generative AI. However, it is as much an experiment on how best to leverage large language models (LLMs) to amplify software.