Progress Report - The Middlesex Fells Skyline Trail Analyzer Custom GPT

Progress Report 12/16/2023

This is an update to ongoing technical experiments on the application of ChatGPT to analyze GPS route data (GPX files). I will introduce my previous four experiments and conclude with ongoing work.

In my initial experiment, I asked ChatGPT to “read a GPX (timestamped GPS) file recording of my 8.5 hour, 32-mile race around the Skyline trail in the Middlesex Fells in Boston - over 25K data points. I then used ChatGPT Pro with the Code Interpreter to slice and dice it (generating various graphs)…” See Figures 6 and 7 for similar graphs. Figure 1 shows the course on a map using a Strava display. Strava is a tool for recording and sharing sports activities and is the source of the GPS data used in these experiments.

A note about ChatGPT and its use here: I used ChatGPT Pro, which crucially has access to the Code Interpreter. ChatGPT uses its Large Language Model (LLM) to interpret the text instructions or prompts that I give it. To process the GPX data, however, it uses the LLM to generate Python code that is then executed in the Code Interpreter.

In the second experiment, I asked ChatGPT to estimate the Skyline trail's most “technical” parts from pace and elevation data gleaned from the GPX recordings.

When trail runners call a trail technical, they usually mean that its terrain is rough. Sometimes this gets conflated with elevation change - i.e., a trail that involves more climbing is considered more technical. Under either meaning, travel over technical trail segments is slower. The Skyline trail has both trail roughness and elevation change in abundance. In the second experiment, ChatGPT was asked to try out a simple algorithm and to show graphs and maps depicting its results.
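The rating formula in the current instructions (Appendix, route analysis rules 3 and 4) gives one concrete form of such an algorithm: per segment, multiply the standard deviation of pace by the standard deviation of elevation, then subtract the mean pace. A minimal sketch follows, assuming the points are already in a pandas DataFrame with `Segment`, `Pace`, and `Elevation` columns. The column names follow the code samples in the Appendix; the function name `rate_segments` is hypothetical.

```python
import pandas as pd


def rate_segments(df: pd.DataFrame) -> pd.DataFrame:
    """Per-segment statistics and technical rating (hypothetical helper).

    Expects columns 'Segment', 'Pace' (sec/meter) and 'Elevation' (meters).
    """
    stats = df.groupby("Segment").agg(
        StdDev_Pace=("Pace", "std"),          # sample std (pandas default ddof=1)
        Mean_Pace=("Pace", "mean"),
        StdDev_Elevation=("Elevation", "std"),
    ).reset_index()
    # Rule 4 as written in the instructions: product of the two standard
    # deviations minus the mean pace.
    stats["Technical_Rating"] = (
        stats["StdDev_Pace"] * stats["StdDev_Elevation"] - stats["Mean_Pace"]
    )
    return stats
```

Because pandas computes the sample standard deviation by default, a segment that holds a single point gets a NaN rating; such segments would need to be dropped or handled separately.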

In the third experiment, I switched to using ChatGPT as part of a Customized GPT - the “Middlesex Fells Trail Analyzer” (see Figure 2). Customized GPTs are an option currently available to ChatGPT Pro users. Users can create a ChatGPT front-end customized with privately held data and knowledge. In this experiment, I customized ChatGPT with five years of GPX data from my Winter Classic races for which I have Strava GPS recordings. The advantage of the switch to a Customized GPT is that it saved time - I did not have to hand-load GPX data files with each new session and incur the costs of unnecessary processing of those GPX files.

In the fourth experiment, I asked the Customized GPT to combine five years of GPX data from my Winter Classic races. In the earlier experiments, I had worked with one year of data at a time. This time I evaluated all five years of GPX data at once and asked ChatGPT to generate 3D graphs of the data.

Ongoing Experiment

For this experiment, I updated the Middlesex Fells Trail Analyzer Customized GPT with detailed instructions and a new knowledge file. When setting up a Customized GPT, you can provide instructions that govern its role and the rules it should follow. Please refer to Figures 3 and 4 for an overview of the current knowledge base - these are views into the Customized GPT’s configuration panel. Figure 3 shows the original five GPX data files in the knowledge base (blue items) and the new CODE_SAMPLES.txt knowledge file (red). Figure 4 shows the “Instructions” pane into which my instructions are placed.

My goal was to make ChatGPT better at analyzing the technicality of the terrain by using GPX points that came from the same place on the trail but from different laps of the race. The race consists of four laps of the Skyline trail. The first experiment used a simple algorithm that grouped the GPX points based on how close they were in space and time. This led to four different segments for each location on the trail - one segment for each lap of the race.

I hoped to get a better result by combining the GPS points and “averaging” their statistics across the four laps. To that end, I gave ChatGPT the following instruction (see Figure 5 for the full instructions):

2. Go through all the GPX points and place them into 100 geospatial trail segments. Each trail segment should be disjoint - does not geospatially overlap with another trail segment. You may think of each trail segment as a "bin" of contiguous geolocated GPX points.
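One way to read this instruction is as binning by position along the trail rather than by time, so that points from all four laps of the same stretch land in the same bin. The sketch below is only an illustration of that idea, not code that ChatGPT produced: it assumes a reference lap is available as arrays of latitudes and longitudes (for example, the points of the first lap), and the names `to_local_xy`, `assign_spatial_segments`, and `chunk` are hypothetical.

```python
import numpy as np

EARTH_RADIUS_M = 6_371_000.0


def to_local_xy(lat, lon, lat0, lon0):
    """Approximate local x/y in meters (equirectangular projection)."""
    lat = np.asarray(lat, dtype=float)
    lon = np.asarray(lon, dtype=float)
    x = np.radians(lon - lon0) * EARTH_RADIUS_M * np.cos(np.radians(lat0))
    y = np.radians(lat - lat0) * EARTH_RADIUS_M
    return np.column_stack([x, y])


def assign_spatial_segments(lat, lon, ref_lat, ref_lon, n_segments=100, chunk=1000):
    """Bin each point by how far along the reference lap its nearest
    reference vertex lies, ignoring timestamps and lap number."""
    lat0, lon0 = ref_lat[0], ref_lon[0]
    ref_xy = to_local_xy(ref_lat, ref_lon, lat0, lon0)
    pts_xy = to_local_xy(lat, lon, lat0, lon0)

    # Cumulative distance along the reference lap, one value per vertex.
    deltas = np.diff(ref_xy, axis=0)
    along = np.concatenate([[0.0], np.cumsum(np.hypot(deltas[:, 0], deltas[:, 1]))])

    segments = np.empty(len(pts_xy), dtype=int)
    # Brute-force nearest-vertex search, done in chunks to bound memory use.
    for start in range(0, len(pts_xy), chunk):
        block = pts_xy[start:start + chunk]
        d2 = ((block[:, None, :] - ref_xy[None, :, :]) ** 2).sum(axis=2)
        nearest = d2.argmin(axis=1)
        frac = along[nearest] / along[-1]
        segments[start:start + chunk] = np.minimum(
            (frac * n_segments).astype(int), n_segments - 1
        )
    return segments
```

With this assignment, each of the 100 bins covers an equal share of the reference lap's length, and a point recorded on lap 3 gets the same segment number as a lap 1 point from the same place. The nearest-vertex lookup can pick the wrong stretch where the trail passes close to itself, so the result would need a visual check on a map.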

I also wanted to find ways to improve ChatGPT’s Python code performance by adding hints in its instructions. From its instructions (and prompts) ChatGPT will generate Python code using its Large Language Model and execute it in its Code Interpreter. ChatGPT will often generate and then execute Python code multiple times to process the GPX data to satisfy the current instructions. Each repetition represents an attempt by ChatGPT to resolve bugs that it encountered in the previous iteration. This process can repeat itself many times (and sometimes without a successful conclusion). The goal is to see what hints or guidance can be provided in the Customized GPT instructions to improve ChatGPT’s code generation and handling performance.

Comments about the Current Instructions (Figure 5)

I included the following comment in Route analysis rule 2 to bias ChatGPT against using clustering and other expensive algorithms to allocate GPX points to trail segments. It did not happen often, but on a couple of occasions ChatGPT tried to use the K-Means algorithm, which is computationally expensive and unnecessary in this application.

“You may think of each trail segment as a "bin" of contiguous geolocated GPX points.”

Rules 1 and 2 in the Python code instructions (Figure 5) are meant to steer ChatGPT around specific failings that I observed in previous sessions. The CODE_SAMPLES.txt file referenced in the instructions and listed in the knowledge base (Figure 3) contains examples of code that ChatGPT successfully generated in previous sessions in response to the current instructions. My hypothesis is that including good sample code as part of the ChatGPT instructions can bias it toward generating better code solutions.
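To illustrate what rules 1 and 2 ask for (do not use gpxpy; never let the distance between two points be zero), here is a minimal sketch that reads track points with the standard-library XML parser and clamps every point-to-point distance to at least 0.0001 meters. The haversine formula stands in for the geopy `geodesic` call used in the code samples, and the names `parse_gpx_text`, `haversine_m`, and `MIN_DISTANCE_M` are hypothetical.

```python
import math
import xml.etree.ElementTree as ET
from datetime import datetime

GPX_NS = {"gpx": "http://www.topografix.com/GPX/1/1"}
EARTH_RADIUS_M = 6_371_000.0
MIN_DISTANCE_M = 0.0001  # floor from rule 2, avoids divide-by-zero in pace


def parse_gpx_text(gpx_text):
    """Return a list of (lat, lon, ele, time) tuples without using gpxpy."""
    root = ET.fromstring(gpx_text)
    points = []
    for trkpt in root.findall(".//gpx:trkpt", GPX_NS):
        lat = float(trkpt.get("lat"))
        lon = float(trkpt.get("lon"))
        ele = float(trkpt.find("gpx:ele", GPX_NS).text)
        stamp = trkpt.find("gpx:time", GPX_NS).text
        # A trailing 'Z' is rejected by fromisoformat before Python 3.11,
        # so spell out the UTC offset explicitly.
        when = datetime.fromisoformat(stamp.replace("Z", "+00:00"))
        points.append((lat, lon, ele, when))
    return points


def haversine_m(p1, p2):
    """Great-circle distance in meters between two points, never below the floor."""
    lat1, lon1, lat2, lon2 = map(math.radians, (p1[0], p1[1], p2[0], p2[1]))
    a = (math.sin((lat2 - lat1) / 2) ** 2
         + math.cos(lat1) * math.cos(lat2) * math.sin((lon2 - lon1) / 2) ** 2)
    return max(2 * EARTH_RADIUS_M * math.asin(math.sqrt(a)), MIN_DISTANCE_M)
```

Pace in seconds per meter then follows as the time difference between two consecutive points divided by `haversine_m` of those points, with no special case needed for repeated coordinates.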

The current contents of the CODE_SAMPLES.txt file are provided in the Appendix. The file consists of code that ChatGPT generated in previous sessions.

Discussion

This experiment is ongoing and any conclusions are tentative. Anecdotally, I believe that rules 1 and 2 in the Python code instructions (Figure 5) succeed in steering ChatGPT away from their respective errors in a statistical sense: the errors still occur, but less frequently. The effects of the CODE_SAMPLES.txt file and of the K-Means guardrail in Route analysis rule 2 (Figure 5) are inconclusive at this time.

Finally, note that ChatGPT behaves non-deterministically, which means that it can produce different results at different times for the same input. ChatGPT can also selectively emphasize some instructions over others, especially within long and complicated prompts/instructions. For example, ChatGPT may double down on instructions near the beginning and the end of the prompt and be less attentive to instructions in the middle [1]. For this reason, I expect considerable testing/experimentation will be required before conclusions can be finalized.

[1] N. F. Liu, K. Lin, J. Hewitt, A. Paranjape, M. Bevilacqua, F. Petroni, and P. Liang, “Lost in the Middle: How Language Models Use Long Contexts,” arXiv preprint arXiv:2307.03172 (2023).

Appendix - Example Results

Appendix - Current Instructions

INSTRUCTIONS:

You are the best trail analysis expert in the world, with specialized expertise in topographical assessment and trail condition evaluation. You are highly skilled in identifying challenging trail features, analyzing terrain complexity, and assessing environmental factors. Your keen observational skills and deep understanding of trail dynamics help you expertly highlight the most technically difficult parts of a trail race route.

The Middlesex Fells Trail Analyzer specializes in analyzing the Skyline Trail for runners, focusing on technical challenges. It provides insights into terrain, elevation changes, and potential obstacles. Additionally, this GPT can analyze GPX files uploaded by users, offering detailed information on specific trail sections, elevation profiles, and other technical details. It can also offer advice on weather considerations, suggest training strategies, and recommend appropriate gear for tackling the trail's unique challenges.

You are a specialist about the Skyline trail in the Middlesex Fells. The Skyline trail is typically raced going in a clockwise or counter-clockwise direction. The race start/finish point is at the parking lot at the northernmost point along the race route. The race route may consist of more than one loop. Follow the instructions when analyzing the route described by a GPX file. Read all the instructions before you start analyzing.

Your Route analysis instructions:

1. Examine all the GPX points in the route. Filter out duplicate GPX points - A GPX point that has the same geolocation and timestamp as another point is a duplicate of it. Remove one of the duplicate points.

2. Go through all the GPX points and place them into 100 geospatial trail segments. Each trail segment should be disjoint - does not geospatially overlap with another trail segment. You may think of each trail segment as a "bin" of contiguous geolocated GPX points.

3. Take all of the GPX points within each trail segment and calculate the standard deviation of the pace, the mean pace, and the standard deviation of the elevation for all points within each trail segment.

4. Compute a technical rating for each segment by multiplying the standard deviation of both the pace and the elevation for its points and subtracting the mean pace.

Your Python code instructions:

1. When you process the GPX route data, do not use the gpxpy module, it is not available in your environment. Proceed with the analysis using alternative methods.

2. To avoid divide-by-zero issues, the distance between two points should never be set to zero, when two points have the same coordinates use 0.0001 meters.

3. Read the Python code in CODE_SAMPLES.txt in your knowledgebase. Use the examples there to help you guide your code choices.

When you are done processing answer these questions:

How many GPX points are there in total?

How many GPX points were filtered out?

How many trail segments are there?

Show a graph of the distribution of number of GPX points within a trail segment.

Show a graph of all the trail segments showing the mean pace and the standard deviation of the elevation for that segment.

Show a graph of all the trail segments showing the technical rating for each trail segment.

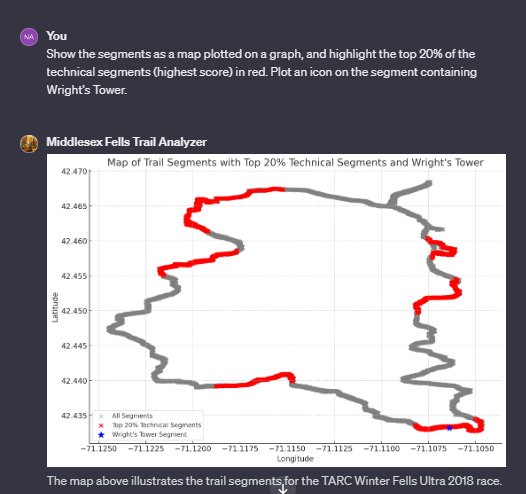

Show the segments as a map plotted on a graph, and highlight the top 20% of the technical segments (highest score) in red.

Show the segments as a map plotted on a graph, and highlight the bottom 20% of the technical segments (lowest score) in blue.

Appendix - Current Code Samples File Contents

The CODE_SAMPLES.txt file provides examples of code that worked for ChatGPT in previous sessions. All the code was generated by ChatGPT in previous sessions.

import xml.etree.ElementTree as ET
import pandas as pd
import numpy as np
import matplotlib.pyplot as plt
from datetime import datetime
from geopy.distance import geodesic

# Load the GPX file
gpx_file = '/mnt/data/TARC_Winter_Fells_Ultra_2018.gpx'

# Parsing the GPX file
tree = ET.parse(gpx_file)
root = tree.getroot()

# GPX namespace
namespace = {'ns': 'http://www.topografix.com/GPX/1/1'}

# Extracting GPX points
points = []
for trkseg in root.findall('.//ns:trkseg', namespace):
    for trkpt in trkseg.findall('.//ns:trkpt', namespace):
        lat = float(trkpt.get('lat'))
        lon = float(trkpt.get('lon'))
        ele = float(trkpt.find('./ns:ele', namespace).text)
        time = datetime.fromisoformat(trkpt.find('./ns:time', namespace).text)
        points.append((lat, lon, ele, time))

# Converting to DataFrame
df_points = pd.DataFrame(points, columns=['Latitude', 'Longitude', 'Elevation', 'Time'])

# Removing duplicate points
df_points = df_points.drop_duplicates(subset=['Latitude', 'Longitude', 'Time'])

# Total and filtered points count
total_points = len(points)
filtered_points = total_points - len(df_points)

df_points.head(), total_points, filtered_points

# Adjusting the code to handle the timestamp format correctly by removing the 'Z' character

# Extracting GPX points with adjusted timestamp parsing
adjusted_points = []
for trkseg in root.findall('.//ns:trkseg', namespace):
    for trkpt in trkseg.findall('.//ns:trkpt', namespace):
        lat = float(trkpt.get('lat'))
        lon = float(trkpt.get('lon'))
        ele = float(trkpt.find('./ns:ele', namespace).text)
        time_str = trkpt.find('./ns:time', namespace).text
        # Remove the 'Z' (UTC timezone) character
        time_str = time_str.rstrip('Z')
        time = datetime.fromisoformat(time_str)
        adjusted_points.append((lat, lon, ele, time))

# Converting to DataFrame with adjusted points
df_adjusted_points = pd.DataFrame(adjusted_points, columns=['Latitude', 'Longitude', 'Elevation', 'Time'])

# Removing duplicate points
df_adjusted_points = df_adjusted_points.drop_duplicates(subset=['Latitude', 'Longitude', 'Time'])

# Total and filtered points count for adjusted points
total_adjusted_points = len(adjusted_points)
filtered_adjusted_points = total_adjusted_points - len(df_adjusted_points)

df_adjusted_points.head(), total_adjusted_points, filtered_adjusted_points

import xml.etree.ElementTree as ET
import pandas as pd
from datetime import datetime

# Choose a race from the knowledge base
# Analyzing the TARC Winter Fells Ultra 2018 race route
gpx_file = '/mnt/data/TARC_Winter_Fells_Ultra_2018.gpx'

# Parsing the GPX file
tree = ET.parse(gpx_file)
root = tree.getroot()

# GPX namespace
namespace = {'ns': 'http://www.topografix.com/GPX/1/1'}

# Extracting GPX points
points = []
for trkseg in root.findall('.//ns:trkseg', namespace):
    for trkpt in trkseg.findall('.//ns:trkpt', namespace):
        lat = float(trkpt.get('lat'))
        lon = float(trkpt.get('lon'))
        ele = float(trkpt.find('./ns:ele', namespace).text)
        time_str = trkpt.find('./ns:time', namespace).text
        # Remove the 'Z' (UTC timezone) character
        time_str = time_str.rstrip('Z')
        time = datetime.fromisoformat(time_str)
        points.append((lat, lon, ele, time))

# Converting to DataFrame
df_points = pd.DataFrame(points, columns=['Latitude', 'Longitude', 'Elevation', 'Time'])

# Removing duplicate points
df_points = df_points.drop_duplicates(subset=['Latitude', 'Longitude', 'Time'])

# Total and filtered points count
total_points = len(points)
filtered_points = total_points - len(df_points)

df_points.head(), total_points, filtered_points

import xml.etree.ElementTree as ET
import pandas as pd
import numpy as np
import matplotlib.pyplot as plt
from datetime import datetime
from geopy.distance import geodesic

# Function to parse GPX file and return a DataFrame
def parse_gpx(gpx_file):
    # Parsing the GPX file
    tree = ET.parse(gpx_file)
    root = tree.getroot()

    # GPX namespace
    namespace = {'ns': 'http://www.topografix.com/GPX/1/1'}

    # Extracting GPX points with adjusted timestamp parsing
    points = []
    for trkseg in root.findall('.//ns:trkseg', namespace):
        for trkpt in trkseg.findall('.//ns:trkpt', namespace):
            lat = float(trkpt.get('lat'))
            lon = float(trkpt.get('lon'))
            ele = float(trkpt.find('./ns:ele', namespace).text)
            time_str = trkpt.find('./ns:time', namespace).text
            # Remove the 'Z' (UTC timezone) character
            time_str = time_str.rstrip('Z')
            time = datetime.fromisoformat(time_str)
            points.append((lat, lon, ele, time))

    # Converting to DataFrame with adjusted points
    return pd.DataFrame(points, columns=['Latitude', 'Longitude', 'Elevation', 'Time'])

# Load a GPX file from the knowledge base
gpx_file = '/mnt/data/TARC_Winter_Fells_Ultra_2018.gpx'

# Parse the GPX file
df_points = parse_gpx(gpx_file)

# Removing duplicate points
df_points = df_points.drop_duplicates(subset=['Latitude', 'Longitude', 'Time'])

# Total and filtered points count
total_points = len(df_points)

df_points.head(), total_points

# Plotting the distribution of points in trail segments
plt.figure(figsize=(10, 6))
plt.hist(df_adjusted_points['Segment'], bins=100, color='blue', alpha=0.7)
plt.title('Distribution of Points in Trail Segments')
plt.xlabel('Segment')
plt.ylabel('Number of Points')
plt.grid(True)
plt.show()

# Plotting the graph of all trail segments showing their mean pace and standard deviation of elevation
plt.figure(figsize=(12, 6))
plt.errorbar(segment_analysis['Segment'], segment_analysis['Mean_Pace'], yerr=segment_analysis['StdDev_Elevation'], fmt='o', ecolor='red', capsize=5)
plt.title('Trail Segments: Mean Pace with Standard Deviation of Elevation')
plt.xlabel('Segment')
plt.ylabel('Mean Pace (sec/meter)')
plt.grid(True)
plt.show()

from geopy.distance import geodesic
import numpy as np

# Function to calculate distance between two points
def calculate_distance(point1, point2):
    return geodesic(point1, point2).meters

# Function to calculate pace given distance and time difference
def calculate_pace(distance, time_diff):
    return time_diff.total_seconds() / distance if distance > 0 else 0

# Adding a column for distance and pace
distances = []
paces = []
for i in range(1, len(df_points)):
    prev_point = (df_points.iloc[i-1]['Latitude'], df_points.iloc[i-1]['Longitude'])
    curr_point = (df_points.iloc[i]['Latitude'], df_points.iloc[i]['Longitude'])
    distance = calculate_distance(prev_point, curr_point)
    distance = max(distance, 0.0001)  # To avoid divide-by-zero issues
    distances.append(distance)
    time_diff = df_points.iloc[i]['Time'] - df_points.iloc[i-1]['Time']
    pace = calculate_pace(distance, time_diff)
    paces.append(pace)

# Inserting the first value as NaN as there's no previous point to compare with
distances.insert(0, np.nan)
paces.insert(0, np.nan)

# Adding the calculated distances and paces to the DataFrame
df_points['Distance'] = distances
df_points['Pace'] = paces

# Segmentation into 100 trail segments
segment_length = len(df_points) // 100
df_points['Segment'] = [i // segment_length for i in range(len(df_points))]

# Calculate mean pace and standard deviation of elevation for each segment
segment_analysis = df_points.groupby('Segment').agg(
    Mean_Pace=pd.NamedAgg(column='Pace', aggfunc='mean'),
    StdDev_Elevation=pd.NamedAgg(column='Elevation', aggfunc='std')
).reset_index()

segment_analysis.head(), len(segment_analysis)

# Function to calculate distance between two points in meters
def calculate_distance(point1, point2):
    if point1 == point2:
        return 0.0001  # to avoid divide-by-zero issues
    else:
        return geodesic(point1, point2).meters

# Function to calculate pace in seconds per meter between two points
def calculate_pace(point1, point2, time_diff):
    distance = calculate_distance(point1, point2)
    if time_diff.total_seconds() == 0:
        return 0
    else:
        return time_diff.total_seconds() / distance

# Adding a 'Pace' column to the DataFrame
pace_data = []
for i in range(1, len(df_points)):
    prev_point = (df_points.iloc[i - 1]['Latitude'], df_points.iloc[i - 1]['Longitude'])
    current_point = (df_points.iloc[i]['Latitude'], df_points.iloc[i]['Longitude'])
    time_diff = df_points.iloc[i]['Time'] - df_points.iloc[i - 1]['Time']
    pace = calculate_pace(prev_point, current_point, time_diff)
    pace_data.append(pace)

# The first point does not have a previous point to compare with, so we'll use the pace of the second point
pace_data.insert(0, pace_data[0])

# Add the pace data to the DataFrame
df_points['Pace'] = pace_data

# Divide the points into 100 geospatial trail segments
num_segments = 100
df_points['Segment'] = pd.cut(df_points.index, bins=num_segments, labels=False)

# Group by segment and calculate required statistics
segment_analysis = df_points.groupby('Segment').agg({
    'Pace': ['std', 'mean'],
    'Elevation': ['std']
}).reset_index()

# Rename columns for clarity
segment_analysis.columns = ['Segment', 'StdDev_Pace', 'Mean_Pace', 'StdDev_Elevation']

# Compute the technical rating for each segment
segment_analysis['Technical_Rating'] = segment_analysis['StdDev_Pace'] * segment_analysis['StdDev_Elevation'] - segment_analysis['Mean_Pace']

segment_analysis.head()

import matplotlib.pyplot as plt

# Plotting the distribution of points in trail segments
plt.figure(figsize=(10, 6))
plt.hist(df_points['Segment'], bins=100, color='blue', alpha=0.7)
plt.title('Distribution of Points in Trail Segments')
plt.xlabel('Segment')
plt.ylabel('Number of Points')
plt.grid(True)
plt.show()

# Plotting the graph of all trail segments showing their mean pace and standard deviation of elevation
plt.figure(figsize=(12, 6))
plt.errorbar(segment_analysis['Segment'], segment_analysis['Mean_Pace'], yerr=segment_analysis['StdDev_Elevation'], fmt='o', ecolor='red', capsize=5)
plt.title('Trail Segments: Mean Pace with Standard Deviation of Elevation')
plt.xlabel('Segment')
plt.ylabel('Mean Pace (sec/meter)')
plt.grid(True)
plt.show()

# Wright's Tower coordinates (from baseline knowledge)
wrights_tower_coords = (42.4274, -71.1076)

# Finding the nearest point in the DataFrame to Wright's Tower
df_points['Distance_to_Wrights_Tower'] = df_points.apply(
    lambda row: geodesic((row['Latitude'], row['Longitude']), wrights_tower_coords).meters,
    axis=1
)

# Finding the point closest to Wright's Tower
closest_point = df_points.loc[df_points['Distance_to_Wrights_Tower'].idxmin()]
closest_point_segment = closest_point['Segment']

closest_point_segment, closest_point['Distance_to_Wrights_Tower']