The Milagro Beanfield War and my Late Night Model Context Protocol Road Trip. Is Claude too Earnest?

Integrating the Bad Science Fiction service into Claude Desktop was the easy part. Now for the hard part: Claude is very eager.

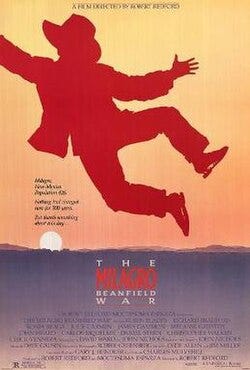

When I was in college, as part of an interpretive theatre group, I had a small part playing Charlie Bloom in a Communication Department’s seminar interpretation of John Nichols’ novel The Milagro Beanfield War. More recently, I watched Robert Redford’s film interpretation out of nostalgia.

I liked the film very much, though some critics were unhappy with its interpretation (something about mixing mythical and “real” themes). In contrast, I was not thrilled about my performance. I thought that I overinterpreted my role.

As I continue what will be a series of Model Context Protocol investigations, or “road trips,” I muse on Claude’s role in my current experiment.

Below is my postcard from last time. In the footnote, I asked Claude for a one-paragraph summary in case you don’t get to the postcard.[1]

Enter Claude…

My Late Night Model Context Protocol Road Trip, On y va!

Last time I described how I stayed up (nearly) all night starting on what will be a series of Model Context Protocol investigations — or “road trips.”

Claude

Building on the progress outlined in my previous postcard(s), I’ve sorted out the remaining glitches—mostly related to return data formatting—and Claude Desktop is now eager to assist with Bad Science Fiction. In some respects, it’s doing exactly what I expect. In others, it’s not there (yet).

The components of the system, and their relationships to one another, are roughly as follows:

The test prompt I’ve been using with Claude (some variation thereof):

Ask bad science fiction the following. Bad Science Fiction will return a JavaScript result. Execute the returned JavaScript and display the result.

Hello, the bad science fiction road trip. Welcome! Consider today's moment:

The storm surged, flinging rhizomes across the horizon. The knotweed heaved and howled closer, an uninvited guest growing with unnatural speed -- the ground shook and growled.

A thunder here, a thicket sprouting there – fearsome and impressive enough. Emily thought it would be another day or two before it neared. But Emily had underestimated it. The knotweed was faster—much faster—than anyone predicted. What was supposed to take days would engulf them in hours. She wasn’t ready. And her mum—out there somewhere in the desert—would be consumed if she didn’t find her soon.

...(plus several more paragraphs)

When I started, I asked my AI consultants to generate a JavaScript template that the BSF server would return to Claude (via the tool) based on existing Python endpoints. I expected something like the following (Figure 1):

However, the AI consultants generated a presentation template, as shown in Figure 2.

This isn't a problem for the current leg of the road trip. Once I figure out what I want, I can easily have my AI consultants change it.
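For readers wondering what "a JavaScript template returned by the server" might look like in practice, here is a minimal sketch. It is not the project's actual template (Figures 1 and 2 are not reproduced here); the names `JS_TEMPLATE` and `render_js` and the fields in the sample data are hypothetical, chosen only to show a Python endpoint embedding its result in a JavaScript snippet.

```python
import json
from string import Template

# Hypothetical template, for illustration only. "$payload" is replaced with
# the JSON-encoded analysis result before the script is returned to the client.
JS_TEMPLATE = Template(
    "const analysis = $payload;\n"
    "const lines = Object.entries(analysis).map(([k, v]) => k + ': ' + v);\n"
    "lines.join(', ');\n"
)


def render_js(analysis: dict) -> str:
    """Embed an analysis result (a plain dict) into the JavaScript template."""
    # json.dumps produces a valid JavaScript object literal for plain data.
    payload = json.dumps(analysis, sort_keys=True)
    return JS_TEMPLATE.substitute(payload=payload)


if __name__ == "__main__":
    print(render_js({"title": "Knotweed", "word_count": 120}))
```

The point of the sketch is the split it makes visible: the data lives in `payload`, and everything else is presentation. Deciding how much of each the server should send is exactly the choice described above.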

That said, the issue of interpretation is front and center. In my experience with Bad Science Fiction, most of the mistakes AI consultants make aren’t reasoning failures per se; they come from misinterpreting the user’s intent.

I’m pretty confident that if I revisited the original prompt and the associated code, I could pinpoint exactly where things went sideways and how the AI misunderstood what I was trying to do.

AI is often (too) eager. Claude is very eager too. A few examples from this road trip:

Interpreting Under A Great Deal of Pressure

Early on, when the tool had connectivity problems with the server, Claude would do its best to interpret the prompt (above) and gamely fill in the void. My favorite is Claude's game-like animated JavaScript simulation of advancing knotweed (from a story theme). See Figure 3.

It was impressive, but not what I intended. Some mighty fine tap dancing, I thought.

At other times, when Claude was confused by failed connectivity to the BSF server, it would fall back on generating JavaScript for a fairly standard word analysis, which was at least somewhat useful. See Figure 4.
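Figure 4 is not reproduced here, and Claude's fallback was written in JavaScript. As a rough idea of what a "fairly standard word analysis" usually computes, here is a minimal Python sketch. The function name `word_analysis` and the choice of statistics are assumptions, not a reconstruction of what Claude actually generated.

```python
import re
from collections import Counter


def word_analysis(text: str, top_n: int = 5) -> dict:
    """Return basic word statistics for a story fragment (illustrative only)."""
    # Lowercase the text and keep alphabetic words, allowing inner apostrophes
    # (straight or curly) so that "wasn’t" stays one word.
    words = re.findall(r"[a-z]+(?:['’][a-z]+)*", text.lower())
    counts = Counter(words)
    return {
        "total_words": len(words),
        "unique_words": len(counts),
        "top_words": counts.most_common(top_n),
    }


if __name__ == "__main__":
    sample = "The knotweed heaved. The knotweed howled."
    print(word_analysis(sample, top_n=2))
```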

Gentle Interpretation

Once Claude Desktop and the Bad Science Fiction server resolved their data format differences and began working together smoothly, the template shown in Figure 2 would display consistently. Nonetheless, Claude continued to interpret the incoming JavaScript (Figure 2), appending its own suggestions at the end. Figures 5 and 6 show a couple of those suggestions.

Takeaways

If you choose to use an LLM-powered application (like Claude Desktop) as a front-end client to a back-end web service, you must justify the AI’s role in the client versus simply using a browser.

A good justification is to leverage the interpretive abilities of an LLM. But this requires careful consideration. You need to identify the situations where it can truly add value—and find ways to guide it toward productive uses.
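One way to guide the model, sketched here under assumptions, is to make failure explicit instead of leaving a void for it to fill. The helper below is hypothetical (`build_tool_result` and `fetch_js` are not names from the BSF project). It returns a dictionary shaped like an MCP tool result as I understand it: a `content` list of text items plus an `isError` flag. Whether the client honors the instruction in the error text is not guaranteed.

```python
from typing import Callable


def build_tool_result(fetch_js: Callable[[], str]) -> dict:
    """Wrap a server call so connectivity failures become explicit tool errors.

    fetch_js is a hypothetical callable that asks the back-end service for
    its JavaScript and raises OSError (e.g. ConnectionError) when unreachable.
    """
    try:
        js = fetch_js()
    except OSError as exc:  # ConnectionError and TimeoutError are OSError subclasses
        message = (
            f"BSF server unavailable ({exc}). "
            "Do not generate a substitute result; report this error to the user."
        )
        return {"isError": True, "content": [{"type": "text", "text": message}]}
    return {"isError": False, "content": [{"type": "text", "text": js}]}


if __name__ == "__main__":
    def unreachable() -> str:
        raise ConnectionError("connection refused")

    print(build_tool_result(unreachable))
```

The design choice is small but deliberate: an explicit error with a stated expectation gives the model something to interpret other than silence, which is when the knotweed simulations tended to appear.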

AI really, really wants to help. But you don’t want it to try too hard.

What is Bad Science Fiction?

BSF, or Bad Science Fiction, describes a collaborative software project on GitHub. As explained in the AI Advances articles, the project is so named because its ostensible goal is to develop a story analysis tool specializing in science fiction, built in collaboration with Generative AI. However, it is as much an experiment in how best to leverage large language models (LLMs) to amplify software development.

[1] The author details their progress integrating a "Bad Science Fiction" (BSF) Python service with Claude Desktop using the Model Context Protocol (MCP). After refactoring the BSF server code using an ASGI middleware/adapter approach to mount the MCP endpoint, they successfully implemented the service as a tool in Claude Desktop. While testing, they expected visualization output similar to earlier sentiment analysis, but encountered unexpected results: Claude generated improvised JavaScript visualizations when it couldn't connect to the BSF server, including animated knotweed representations and analytical displays of story fragments. The author notes three key takeaways: leveraging existing SDKs saves time, AI consultants were more valuable for planning and debugging than coding, and unexpected AI outputs occasionally lead to interesting discoveries, drawing parallels to "Vibe Coding."