Towards Automating a GitHub Workflow to Generate Architecture Diagrams

Pipeline Dreams: GitHub Actions and Generative AI

Overview

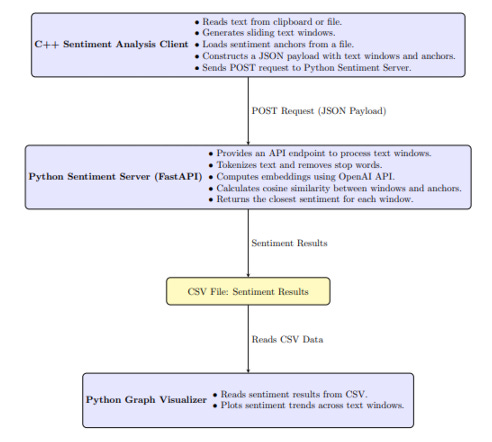

Wouldn’t it be great if I could auto-generate the architectural diagrams, such as Figure 2, each time I push new code into my repository?

Initially, I created these figures by hand in PowerPoint and collaborated with ChatGPT to refine the text bullet points. Later, ChatGPT handled most of the work by generating the LaTeX directly from my posts, which I then entered manually into Overleaf.com for rendering.

Going forward, I would like to automate this process so that architecture figures are generated as a side effect of the build process and automatically sent to a “Management” repository. There, they would be further processed into other metadata—such as software changes over time—for use in changelogs and other tracking.

The Story

Last weekend, I spent about nine hours on the trail in a race. After a shower and a good night's sleep, I concluded I needed to do more of Figure 1 (training).

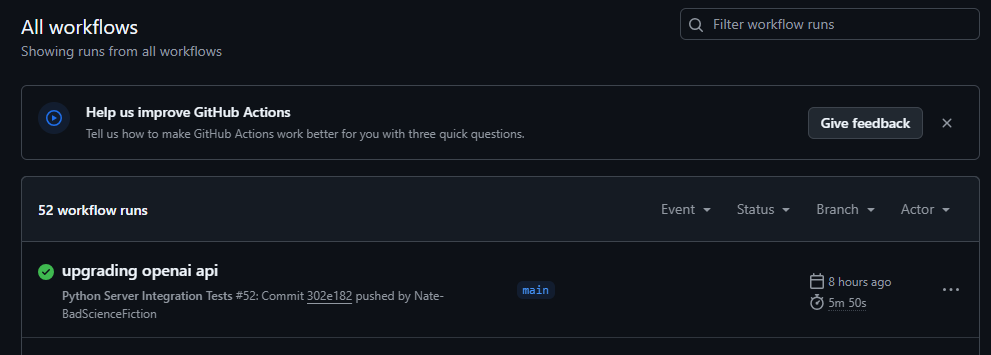

This weekend, I spent more time than that setting up a system of two GitHub repositories with linked workflows (Actions pipelines) for the Bad Science Fiction project. Using generative AI, the setup will automatically generate architecture diagrams like those in Figure 2.

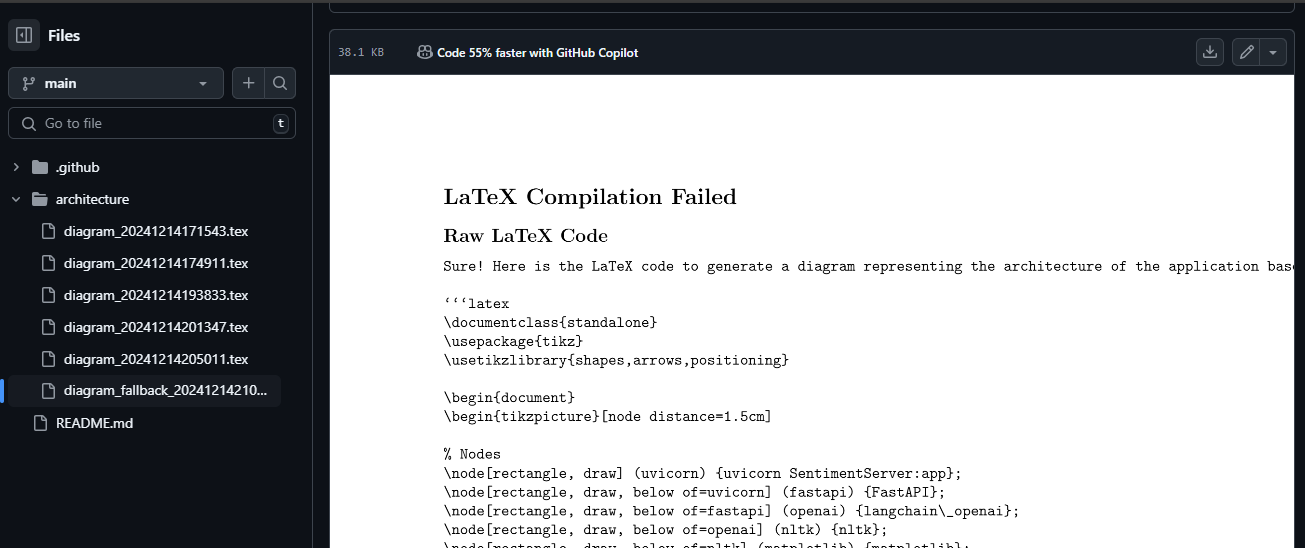

It’s not done, but it’s close. At the moment, it produces a PDF report of a failed LaTeX compilation instead of something like Figure 2. This seems like a good point to step back and take a breather.

What have I concluded?

What is Bad Science Fiction?

BSF, or Bad Science Fiction, describes a collaborative software project on GitHub. As explained in the AI Advances articles, the project is so named because its ostensible goal is to develop a story analysis tool specializing in science fiction—a tool to be developed in collaboration with Generative AI. However, it is as much an experiment on how best to leverage large language models (LLMs) to amplify software development productivity.

What is Going on Here?

Figure 2 depicts the architecture of the Bad Science Fiction application from a few weeks ago. The application is hosted in the GitHub repository Bad-Science-Fiction-Anchor-Tool, which already uses a GitHub Actions workflow to test and style-check its contents.

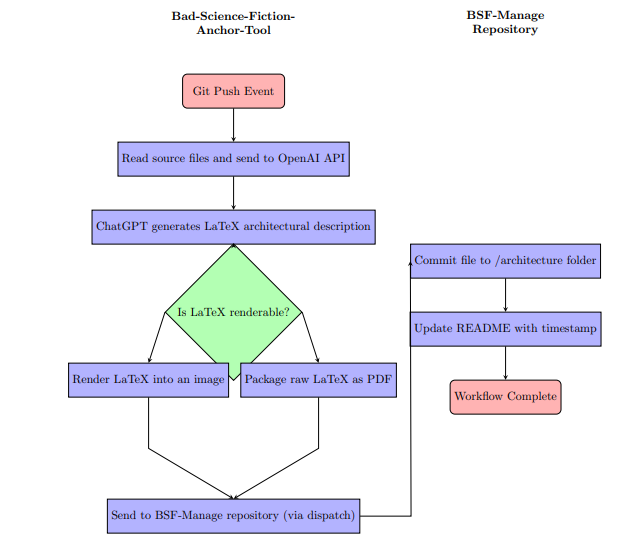

This post concerns enhancing this workflow by integrating ChatGPT to automatically generate LaTeX architectural descriptions of the application in response to git push events.

Once generated in the Bad-Science-Fiction-Anchor-Tool pipeline, these documents are sent to the BSF-Manage repository for post-processing, version control, and final storage.

A few details:

Bad-Science-Fiction-Anchor-Tool Actions:

Source files are read from the repository and sent to ChatGPT using the OpenAI API. ChatGPT is prompted to generate a LaTeX representation of the application architecture.

The OpenAI API is called from a step in the GitHub Actions workflow.

The returned LaTeX is currently rendered into an image and sent to the BSF-Manage repository via a GitHub repository_dispatch trigger. If the LaTeX output from ChatGPT cannot be rendered, it is packaged in its raw form as a PDF document and sent instead of the rendered image (for example, see Figure 3).

BSF-Manage Actions:

Incoming architecture descriptions, either PNG images (rendered LaTeX) or fallback PDFs, are committed into an /architecture folder.

The repository's top-level Readme.md is updated with the timestamp of the latest delivery from the Bad-Science-Fiction-Anchor-Tool repository.

These details are captured in Figure 4.

The flow described in Figure 4 is functional. However, the automated LaTeX components of the workflow are still under development, as evidenced by the results shown in Figure 3. The long-term purpose of the BSF-Manage repository is to save project metadata obtained from automated sources, such as changelogs and software/architecture descriptions.
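The hand-off between the two repositories is a single authenticated POST to GitHub's repository_dispatch endpoint. As a minimal sketch, assuming the `latex_diagram_generated` event type and the `latex_image`/`latex_pdf` payload keys used in Appendix 2, the sending side could be written in Python with only the standard library. `build_dispatch_payload`, `send_dispatch`, and the 7,000,000-character limit (copied from the shell version) are illustrative, not the project's actual code. Serializing with `json.dumps` avoids hand-escaping quotes inside a shell string.

```python
import base64
import json
import urllib.request
from pathlib import Path

EVENT_TYPE = "latex_diagram_generated"  # must match the types: filter in BSF-Manage
MAX_ENCODED_CHARS = 7_000_000  # same conservative limit as the shell version (assumption)


def build_dispatch_payload(artifact: Path) -> dict:
    """Base64-encode a rendered PNG or a fallback PDF into a repository_dispatch body."""
    key = "latex_image" if artifact.suffix.lower() == ".png" else "latex_pdf"
    encoded = base64.b64encode(artifact.read_bytes()).decode("ascii")
    if len(encoded) > MAX_ENCODED_CHARS:
        raise ValueError(f"{artifact.name}: encoded size {len(encoded)} exceeds the limit")
    return {"event_type": EVENT_TYPE, "client_payload": {key: encoded}}


def send_dispatch(owner: str, repo: str, token: str, payload: dict) -> int:
    """POST the payload to /repos/{owner}/{repo}/dispatches and return the HTTP status."""
    request = urllib.request.Request(
        f"https://api.github.com/repos/{owner}/{repo}/dispatches",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Accept": "application/vnd.github+json",
            "Content-Type": "application/json",
            "Authorization": f"Bearer {token.strip()}",  # drop stray pasted whitespace
        },
        method="POST",
    )
    # urlopen raises urllib.error.HTTPError for 4xx/5xx; GitHub returns 204 on success.
    with urllib.request.urlopen(request) as response:
        return response.status
```

A workflow step could then call `send_dispatch(owner, "BSF-Manage", os.environ["REPO_DISPATCH_TOKEN"], build_dispatch_payload(Path("architecture_diagram.png")))`, with `owner` supplied by the workflow.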

How was the Quality of the Generative AI Collaboration?

So far, this task has taken about twice as long as the previous weekend’s trail race, making it the most time-consuming task performed for the BSF project to date. Generative AI continued to play an integral role, although it was less central here than in earlier tasks.

I used the ChatGPT models o1, o1-mini, and 4o throughout the process. These generative AI models proved valuable for refining my initial design, exploring key technical details, and drafting script templates. However, when troubleshooting and resolving the more time-consuming issues, I relied more on traditional resources like Stack Overflow and other online sources.

Some highlights:

The kick-off prompt was straightforward. ChatGPT o1’s response was thorough (see Appendix 1), serving as a useful top-level road map for this task.

Do the following:

1.) Update the following github actions workflow script (python-tests.yml) to read the source code in the repository and ask ChatGPT to generate a LaTeX diagram of its application architecture. It should then send the LaTeX using repository_dispatch to an external BSF-Manage repository.

2.) Generate the python-tests.yml for the BSF-Manage repository such that when it receives a LaTeX diagram from the Bad-Science-Fiction-Anchor-Tool repository workflow, it will copy it into an "architecture" subdirectory in the repository as well as list its entry (by timestamp) in the Readme.md file.

3.) Describe the steps to implement 1.) and 2.) in GitHub.

===

Source Code:

Bad-Science-Fiction-Anchor-Tool python-tests.yml:

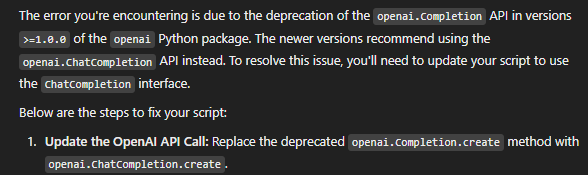

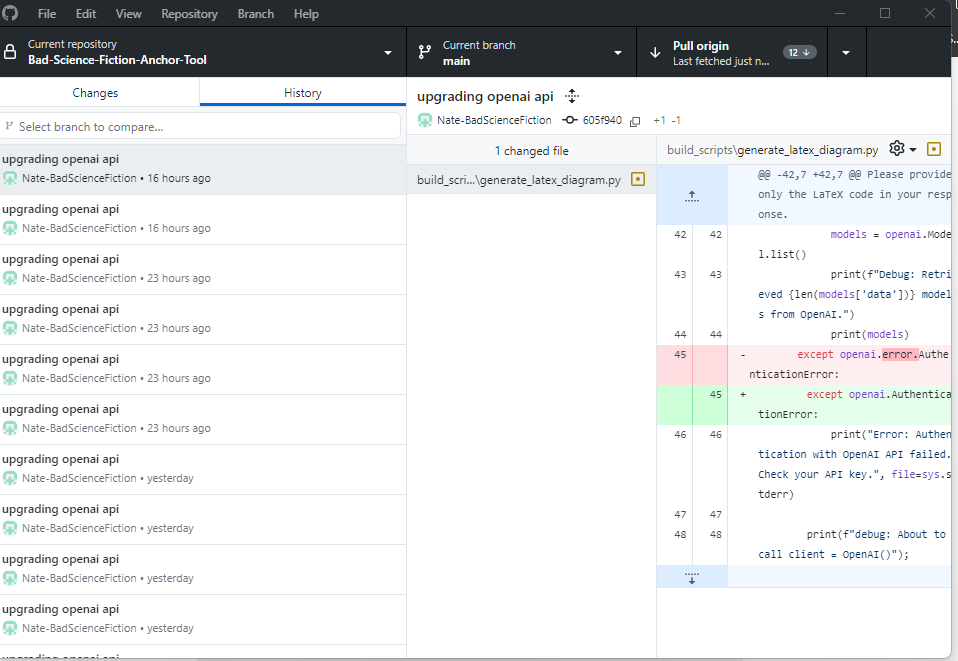

(code removed for readability)Initially, much time was spent troubleshooting conflicts in my OpenAI API usage in the

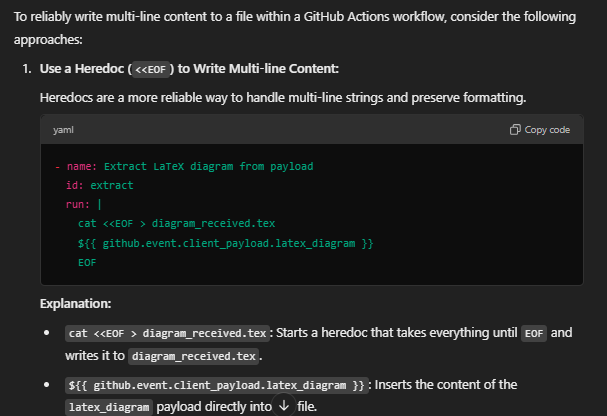

python-tests.ymlscript and with the OpenAI API module installed in the GitHub pipeline environment. Figure 5 is an example of the feedback I would receive from ChatGPT in response to these pipeline errors.

I struggled with bizarre connectivity issues that were ultimately caused by extra whitespace added to my GitHub secrets, OPENAI_API_KEY and REPO_DISPATCH_TOKEN, during cut-and-paste. These errors were on me! Although annoyed, I admired how dogged ChatGPT was with its generic checklists, even though it had no idea of the root cause of my issue. One checklist item suggested resetting the keys, which resolved the problem.
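Because GitHub masks secret values in workflow logs, a pasted-in newline or space is easy to miss. A minimal guard, assuming the key reaches the script as the `OPENAI_API_KEY` environment variable as in the appendices; `read_secret` is a hypothetical helper, not part of any library:

```python
import os


def read_secret(name: str) -> str:
    """Return an environment secret, removing and reporting stray whitespace."""
    raw = os.environ.get(name)
    if raw is None or not raw.strip():
        raise RuntimeError(f"{name} is not set or is empty")
    cleaned = raw.strip()
    if cleaned != raw:
        # Log only a character count so the secret value itself never reaches the log.
        print(f"warning: stripped {len(raw) - len(cleaned)} whitespace characters from {name}")
    if any(ch.isspace() for ch in cleaned):
        # Internal whitespace cannot be fixed safely; the secret must be re-pasted.
        raise RuntimeError(f"{name} contains internal whitespace; reset the secret")
    return cleaned
```

Calling `api_key = read_secret("OPENAI_API_KEY")` at the top of the generation script turns an opaque connection error into an explicit message.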

I also spent significant time debugging incompatibilities between YAML, shell commands, and various strings or character sequences. Figure 6 highlights some of the banter with ChatGPT. Its advice on these issues was often close, but not close enough. I sidestepped many of these problems, which were caused by transferring text between workflows, by moving PDFs and PNGs instead. However, I will need to revisit this in the future.
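One way to keep raw LaTeX out of YAML and shell quoting entirely is to stop splicing it into an `echo` string. The `verbatim` package, which the fallback document in Appendix 2 already loads, provides `\verbatiminput{file}`, which typesets a file's contents verbatim at compile time. A minimal sketch, assuming the file names from Appendix 2 and that `pdflatex` runs in the same directory; `write_fallback_tex` is a hypothetical helper:

```python
from pathlib import Path

# Static wrapper: \verbatiminput reads the raw file when pdflatex runs, so the
# generated LaTeX never passes through YAML or shell quoting.
FALLBACK_TEMPLATE = r"""\documentclass{article}
\usepackage{verbatim}
\usepackage[margin=1in]{geometry}
\begin{document}
\section*{LaTeX Compilation Failed}
\subsection*{Raw LaTeX Code}
\verbatiminput{%s}
\end{document}
"""


def write_fallback_tex(raw_tex: str = "architecture_diagram.tex",
                       out_path: str = "fallback.tex") -> Path:
    """Write a wrapper document that displays the file raw_tex verbatim."""
    if not Path(raw_tex).is_file():
        raise FileNotFoundError(f"{raw_tex} was not produced; nothing to wrap")
    out = Path(out_path)
    out.write_text(FALLBACK_TEMPLATE % raw_tex, encoding="utf-8")
    return out
```

The existing `pdflatex -interaction=nonstopmode fallback.tex` command can then compile the wrapper unchanged.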

Discussion

ChatGPT was most useful in the initial stages, helping to lay out the approach, create starter designs, and generate script templates. However, it became less helpful when resolving specific implementation details—for example, deciding which code sample, based on what OpenAI API version, would be best for my pipeline environment.
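One well-known instance of this kind of version mismatch: the script in Appendix 1 calls `openai.ChatCompletion.create`, which is no longer supported in version 1.0 and later of the `openai` Python package, so an unpinned `pip install openai` in the pipeline installs a library that rejects that call. A minimal sketch of the same request against the 1.x client follows. The prompt text and the `gpt-4` model name are carried over from Appendix 1; the helper names are hypothetical; and the fence-stripping step assumes the model sometimes wraps its answer in a markdown code fence, which `pdflatex` cannot compile.

```python
import os
import re

SYSTEM_PROMPT = "You are an assistant that generates LaTeX diagrams based on source code."
FENCE = "`" * 3  # a markdown code fence


def build_messages(source_code: str) -> list[dict]:
    """Assemble the chat messages using the prompt wording from Appendix 1."""
    user_prompt = (
        "Generate a LaTeX diagram representing the architecture of the following Python "
        "server application. Focus on the main components, their interactions, and data "
        f"flow. Use the source code below as reference:\n\n{source_code}\n\n"
        "Provide the LaTeX code using the TikZ package suitable for compiling into a PDF."
    )
    return [
        {"role": "system", "content": SYSTEM_PROMPT},
        {"role": "user", "content": user_prompt},
    ]


def strip_code_fence(text: str) -> str:
    """Remove a leading fence (optionally tagged, e.g. 'latex') and its closing fence."""
    pattern = re.compile(rf"^\s*{FENCE}[a-zA-Z]*\s*\n(.*?)\n\s*{FENCE}\s*$", re.DOTALL)
    match = pattern.match(text)
    return match.group(1) if match else text


def generate_latex(source_code: str, model: str = "gpt-4") -> str:
    """Request the diagram through the openai>=1.0 client interface."""
    from openai import OpenAI  # imported here so the helpers above work without openai

    client = OpenAI(api_key=os.environ["OPENAI_API_KEY"].strip())
    response = client.chat.completions.create(
        model=model,
        messages=build_messages(source_code),
        temperature=0.3,  # max_tokens omitted so a long document is not cut off
    )
    return strip_code_fence(response.choices[0].message.content or "")
```

Pinning the package in requirements.txt (for example, `openai>=1.0`) keeps the pipeline and the code sample on the same API generation.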

Ultimately, debugging can involve highly specific problems that don't fit easily into more general knowledge. For instance, what version are you working with? How will you fit that character sequence into this YAML file at that location in the script? These precision problems can be particularly challenging for generative AI models when there isn’t enough context or when they haven’t encountered enough similar examples, which makes it harder for them to distinguish your specific issue from similar but different ones. I think the OpenAI API version conflicts and the YAML string issues described earlier fall into this category.

Nonetheless, keeping generative AI in the loop is worthwhile, even if it doesn't answer your question exactly. I found ChatGPT useful even for issues I ultimately resolved the old-fashioned way, by searching the internet. For example, it helped me quickly generate debug code snippets and provided checklists and examples that served as a source of ideas and second opinions.

Appendix 1

ChatGPT o1’s original response in full.

Certainly! Below is the updated GitHub Actions workflow script that includes steps to generate a LaTeX architecture diagram using ChatGPT and compile it into a PDF. This involves adding steps to:

1. **Install LaTeX**: Necessary for compiling LaTeX documents.

2. **Generate the LaTeX Diagram**: A Python script interacts with the OpenAI API to generate LaTeX code based on your source code.

3. **Compile LaTeX to PDF**: Converts the generated `.tex` file into a PDF.

4. **Upload the PDF as an Artifact**: Makes the generated PDF available for download after the workflow runs.

### Updated Workflow Script

```yaml
name: Python Server Integration Tests

on:
  push:
    branches: [ main ]
  pull_request:
    branches: [ main ]

jobs:
  build:
    runs-on: ubuntu-latest

    steps:
      - name: Checkout repository
        uses: actions/checkout@v3

      - name: Set up Python
        uses: actions/setup-python@v4
        with:
          python-version: '3.9' # Specify your Python version

      - name: Install dependencies
        run: |
          python -m pip install --upgrade pip
          pip install -r requirements.txt
          pip install flake8 black
          pip install mypy
          pip install requests openai # Added 'openai' for API interaction

      - name: Set up NLTK data
        run: |
          python -m nltk.downloader punkt
          python -m nltk.downloader punkt_tab

      - name: Create openapi.key file
        run: |
          echo "${{ secrets.OPENAPI_KEY }}" > openapi.key

      - name: Run flake8
        run: flake8 .
        continue-on-error: true # Prevents the workflow from failing

      - name: Run black (Check mode)
        run: black --check .
        continue-on-error: true # Prevents the workflow from failing

      - name: Run mypy
        run: mypy .
        continue-on-error: true # Prevents the workflow from failing

      - name: Run Unit Tests
        env:
          OPENAI_API_KEY: ${{ secrets.OPENAPI_KEY }}
        run: |
          # Navigate to the tests directory if necessary
          cd BSF_PythonUnitTests
          # Run the tests using unittest
          python -m unittest discover -s . -p 'BSF_PythonUnitTest*.py'

      # New Steps Start Here
      - name: Install LaTeX
        run: |
          sudo apt-get update
          sudo apt-get install -y texlive-full

      - name: Generate LaTeX Architecture Diagram
        env:
          OPENAI_API_KEY: ${{ secrets.OPENAPI_KEY }}
        run: |
          # Ensure 'openai' is installed
          pip install openai
          # Create a Python script to generate the LaTeX diagram
          cat <<'EOF' > generate_diagram.py
          import os
          import openai

          def read_source_code(directory='.'):
              source_files = []
              for root, dirs, files in os.walk(directory):
                  for file in files:
                      if file.endswith('.py'):
                          with open(os.path.join(root, file), 'r') as f:
                              source_files.append(f.read())
              return '\n'.join(source_files)

          def generate_latex_diagram(source_code, api_key):
              openai.api_key = api_key
              prompt = f"""
              Generate a LaTeX diagram representing the architecture of the following Python server application. Focus on the main components, their interactions, and data flow. Use the source code below as reference:

              {source_code}

              Provide the LaTeX code using the TikZ package suitable for compiling into a PDF.
              """
              try:
                  response = openai.ChatCompletion.create(
                      model="gpt-4",
                      messages=[
                          {"role": "system", "content": "You are an assistant that generates LaTeX diagrams based on source code."},
                          {"role": "user", "content": prompt}
                      ],
                      temperature=0.3,
                      max_tokens=1500,
                  )
                  latex_code = response['choices'][0]['message']['content']
                  return latex_code
              except Exception as e:
                  print(f"Error generating LaTeX diagram: {e}")
                  return None

          def main():
              api_key = os.getenv("OPENAI_API_KEY")
              if not api_key:
                  print("OPENAI_API_KEY not set.")
                  return
              source_code = read_source_code()
              latex_code = generate_latex_diagram(source_code, api_key)
              if latex_code:
                  with open("architecture_diagram.tex", "w") as f:
                      f.write(latex_code)
                  print("LaTeX diagram generated successfully.")
              else:
                  print("Failed to generate LaTeX diagram.")

          if __name__ == "__main__":
              main()
          EOF
          # Run the Python script to generate the LaTeX diagram
          python generate_diagram.py

      - name: Compile LaTeX to PDF
        run: |
          pdflatex architecture_diagram.tex

      - name: Upload Architecture Diagram PDF
        if: success()
        uses: actions/upload-artifact@v3
        with:
          name: architecture_diagram
          path: architecture_diagram.pdf
```

### Detailed Explanation of Added Steps

1. **Install LaTeX**

LaTeX is required to compile the `.tex` file into a PDF. The `texlive-full` package is comprehensive, ensuring all necessary packages are available.

```yaml
- name: Install LaTeX
  run: |
    sudo apt-get update
    sudo apt-get install -y texlive-full
```

2. **Generate LaTeX Architecture Diagram**

This step involves creating and running a Python script that interacts with the OpenAI API to generate the LaTeX code for the architecture diagram based on your repository's source code.

```yaml
- name: Generate LaTeX Architecture Diagram
  env:
    OPENAI_API_KEY: ${{ secrets.OPENAPI_KEY }}
  run: |
    # Ensure 'openai' is installed
    pip install openai
    # Create a Python script to generate the LaTeX diagram
    cat <<'EOF' > generate_diagram.py
    import os
    import openai

    def read_source_code(directory='.'):
        source_files = []
        for root, dirs, files in os.walk(directory):
            for file in files:
                if file.endswith('.py'):
                    with open(os.path.join(root, file), 'r') as f:
                        source_files.append(f.read())
        return '\n'.join(source_files)

    def generate_latex_diagram(source_code, api_key):
        openai.api_key = api_key
        prompt = f"""
        Generate a LaTeX diagram representing the architecture of the following Python server application. Focus on the main components, their interactions, and data flow. Use the source code below as reference:

        {source_code}

        Provide the LaTeX code using the TikZ package suitable for compiling into a PDF.
        """
        try:
            response = openai.ChatCompletion.create(
                model="gpt-4",
                messages=[
                    {"role": "system", "content": "You are an assistant that generates LaTeX diagrams based on source code."},
                    {"role": "user", "content": prompt}
                ],
                temperature=0.3,
                max_tokens=1500,
            )
            latex_code = response['choices'][0]['message']['content']
            return latex_code
        except Exception as e:
            print(f"Error generating LaTeX diagram: {e}")
            return None

    def main():
        api_key = os.getenv("OPENAI_API_KEY")
        if not api_key:
            print("OPENAI_API_KEY not set.")
            return
        source_code = read_source_code()
        latex_code = generate_latex_diagram(source_code, api_key)
        if latex_code:
            with open("architecture_diagram.tex", "w") as f:
                f.write(latex_code)
            print("LaTeX diagram generated successfully.")
        else:
            print("Failed to generate LaTeX diagram.")

    if __name__ == "__main__":
        main()
    EOF
    # Run the Python script to generate the LaTeX diagram
    python generate_diagram.py
```

**Script Breakdown:**

- **Reading Source Code**: The `read_source_code` function traverses the repository and concatenates all Python files' contents.

- **Generating LaTeX Code**: The `generate_latex_diagram` function sends the source code to the OpenAI API with a prompt to generate a TikZ-based LaTeX diagram.

- **Handling API Response**: The generated LaTeX code is written to `architecture_diagram.tex`.

- **Error Handling**: Basic error handling is included to catch issues with the API call.

3. **Compile LaTeX to PDF**

This step compiles the generated `.tex` file into a PDF using `pdflatex`.

```yaml
- name: Compile LaTeX to PDF
  run: |
    pdflatex architecture_diagram.tex
```

4. **Upload Architecture Diagram PDF**

After successful compilation, the PDF is uploaded as an artifact, making it available for download from the workflow run.

```yaml
- name: Upload Architecture Diagram PDF
  if: success()
  uses: actions/upload-artifact@v3
  with:
    name: architecture_diagram
    path: architecture_diagram.pdf
```

### Additional Considerations

- **API Rate Limits and Costs**: Interacting with the OpenAI API consumes tokens and may incur costs. Ensure your usage stays within your OpenAI plan's limits.

- **Repository Size**: For large repositories, sending all source code to the API might exceed token limits or lead to long processing times. Consider modifying the `read_source_code` function to include only relevant parts or summarize the architecture before sending.

- **Security**: Ensure that no sensitive information is present in the source code that gets sent to the OpenAI API, especially if your repository is public.

- **LaTeX Compilation Time**: Installing `texlive-full` can be time-consuming. If build times are a concern, consider installing a minimal LaTeX distribution or using caching strategies.

- **Error Handling Enhancements**: The current script has basic error handling. Depending on your needs, you might want to add more robust checks and fallback mechanisms.

- **Customization of the Prompt**: Adjust the prompt in the Python script to better suit the specifics of your architecture or the level of detail you require in the diagram.

### Final Workflow Overview

1. **Checkout and Setup**: The workflow checks out the repository, sets up Python, and installs dependencies.

2. **Code Quality and Testing**: Runs linters (`flake8`, `black`), type checks (`mypy`), and unit tests.

3. **LaTeX Installation**: Installs the LaTeX environment needed for compiling the diagram.

4. **Diagram Generation**: Uses a Python script to interact with ChatGPT, generating a LaTeX diagram based on the source code.

5. **Compilation and Upload**: Compiles the LaTeX code into a PDF and uploads it as an artifact for review.

This comprehensive workflow ensures that every push or pull request to the `main` branch not only runs your tests and code quality checks but also generates an up-to-date architecture diagram of your Python server application.

Appendix 2

Example. Bad-Science-Fiction-Anchor-Tool repository python-tests.yml

name: Python Server Integration Tests

on:
  push:
    branches: [ main ]
  pull_request:
    branches: [ main ]

jobs:
  build:
    runs-on: ubuntu-latest

    steps:
      - name: Checkout repository
        uses: actions/checkout@v3

      - name: Set up Python
        uses: actions/setup-python@v4
        with:
          python-version: '3.9'

      - name: Install dependencies
        run: |
          python -m pip install --upgrade pip
          pip install -r requirements.txt
          pip install flake8 black mypy

      - name: Set up NLTK data
        run: |
          python -m nltk.downloader punkt
          python -m nltk.downloader punkt_tab

      - name: Create openapi.key file
        run: |
          echo "${{ secrets.OPENAPI_KEY }}" > openapi.key

      - name: Run flake8
        run: flake8 .
        continue-on-error: true

      - name: Run black (Check mode)
        run: black --check .
        continue-on-error: true

      - name: Run mypy
        run: mypy .
        continue-on-error: true

      - name: Run Unit Tests
        env:
          OPENAI_API_KEY: ${{ secrets.OPENAPI_KEY }}
        run: |
          cd BSF_PythonUnitTests
          python -m unittest discover -s . -p 'BSF_PythonUnitTest*.py'

      # New steps to generate and send LaTeX diagram as an image or fallback PDF
      - name: Gather code structure
        id: code_structure
        run: |
          # Example: Collect code into a file for reference
          find . -type f \( -name "SentimentAnchors.cpp" -o -name "BSF-WindowsAnchorClient.cpp" -o -name "SentimentServer.py" \) -exec cat {} + > code_structure.json

      - name: Install OpenAI Python Package
        run: |
          pip install openai

      - name: Generate LaTeX diagram using ChatGPT
        id: generate_latex
        env:
          OPENAI_API_KEY: ${{ secrets.OPENAPI_KEY }}
        run: |
          # This script should produce architecture_diagram.tex
          python ./build_scripts/generate_latex_diagram.py

      - name: Install LaTeX and ImageMagick
        run: |
          sudo apt-get update
          sudo apt-get install -y texlive texlive-latex-extra imagemagick

      - name: Compile LaTeX to PDF
        id: compile_latex
        run: |
          pdflatex -interaction=nonstopmode architecture_diagram.tex
        continue-on-error: true

      - name: Check LaTeX Compilation Status
        id: check_compile
        run: echo "COMPILE_STATUS=${{ steps.compile_latex.outcome }}" >> $GITHUB_ENV

      - name: Convert PDF to PNG (If Compilation Succeeded)
        if: env.COMPILE_STATUS == 'success'
        run: |
          convert -density 300 architecture_diagram.pdf -quality 90 architecture_diagram.png

      - name: Encode PNG to Base64 (If Compilation Succeeded)
        if: env.COMPILE_STATUS == 'success'
        run: |
          BASE64_IMAGE=$(base64 -w 0 architecture_diagram.png)
          echo "BASE64_IMAGE=${BASE64_IMAGE}" >> $GITHUB_ENV

      - name: Generate Fallback PDF with Raw LaTeX Code (If Compilation Failed)
        if: env.COMPILE_STATUS != 'success'
        run: |
          echo "\documentclass{article}
          \usepackage{verbatim}
          \usepackage[margin=1in]{geometry}
          \begin{document}
          \section*{LaTeX Compilation Failed}
          \subsection*{Raw LaTeX Code}
          \begin{verbatim}
          $(cat architecture_diagram.tex)
          \end{verbatim}
          \end{document}" > fallback.tex
          pdflatex -interaction=nonstopmode fallback.tex

      - name: Encode Fallback PDF to Base64 (If Compilation Failed)
        if: env.COMPILE_STATUS != 'success'
        run: |
          BASE64_PDF=$(base64 -w 0 fallback.pdf)
          echo "BASE64_PDF=${BASE64_PDF}" >> $GITHUB_ENV

      - name: Dispatch Event to BSF-Manage Repository
        env:
          REPO_DISPATCH_TOKEN: ${{ secrets.REPO_DISPATCH_TOKEN }} # PAT with access to BSF-Manage; the default GITHUB_TOKEN is scoped to this repository only
        run: |
          OWNER="..."
          REPO="BSF-Manage"
          EVENT_TYPE="latex_diagram_generated"

          if [ "${COMPILE_STATUS}" == "success" ]; then
            # Ensure the image size is within acceptable limits (e.g., <7MB after Base64 encoding)
            IMAGE_SIZE=$(echo $BASE64_IMAGE | wc -c)
            if [ "$IMAGE_SIZE" -gt 7000000 ]; then
              echo "Encoded image size ($IMAGE_SIZE bytes) exceeds the 7MB limit."
              exit 1
            fi
            PAYLOAD="{\"event_type\":\"$EVENT_TYPE\", \"client_payload\":{\"latex_image\":\"$BASE64_IMAGE\"}}"
          else
            # Ensure the PDF size is within acceptable limits (e.g., <7MB after Base64 encoding)
            PDF_SIZE=$(echo $BASE64_PDF | wc -c)
            if [ "$PDF_SIZE" -gt 7000000 ]; then
              echo "Encoded PDF size ($PDF_SIZE bytes) exceeds the 7MB limit."
              exit 1
            fi
            PAYLOAD="{\"event_type\":\"$EVENT_TYPE\", \"client_payload\":{\"latex_pdf\":\"$BASE64_PDF\"}}"
          fi

          RESPONSE=$(curl -s -o response.json -w "%{http_code}" -X POST \
            -H "Accept: application/vnd.github+json" \
            -H "Authorization: Bearer $REPO_DISPATCH_TOKEN" \
            https://api.github.com/repos/$OWNER/$REPO/dispatches \
            -d "$PAYLOAD")

          if [ "$RESPONSE" -ne 204 ]; then
            echo "Failed to dispatch event. HTTP Status Code: $RESPONSE"
            cat response.json
            exit 1
          else
            echo "Successfully dispatched event to $OWNER/$REPO"
          fi

Appendix 3

Example. BSF-Manage repository python-tests.yml

name: Handle LaTeX Diagram Dispatch

on:
  repository_dispatch:
    types: [latex_diagram_generated]

permissions:
  contents: write # Ensure the workflow has write permissions

jobs:
  handle_latex_diagram:
    runs-on: ubuntu-latest

    steps:
      - name: Checkout repository
        uses: actions/checkout@v3

      - name: Create architecture directory if not present
        run: mkdir -p architecture

      - name: Extract LaTeX Image from Payload (If Present)
        if: github.event.client_payload.latex_image != ''
        id: extract_image
        run: |
          # Decode the Base64 image and save as PNG
          echo "${{ github.event.client_payload.latex_image }}" | base64 -d > architecture_diagram.png

      - name: Extract Fallback PDF from Payload (If Present)
        if: github.event.client_payload.latex_pdf != ''
        id: extract_pdf
        run: |
          # Decode the Base64 PDF and save as PDF
          echo "${{ github.event.client_payload.latex_pdf }}" | base64 -d > architecture_fallback.pdf

      - name: Verify LaTeX Image
        if: github.event.client_payload.latex_image != ''
        run: |
          ls -l architecture/
          file architecture_diagram.png
          identify architecture_diagram.png || echo "ImageMagick not installed."

      - name: Verify Fallback PDF
        if: github.event.client_payload.latex_pdf != ''
        run: |
          ls -l architecture/
          file architecture_fallback.pdf
          # Optionally, check PDF integrity
          pdftotext architecture_fallback.pdf - | grep "LaTeX Compilation Failed" || echo "Fallback PDF does not contain expected content."

      - name: Timestamp and Move Diagram Image
        if: github.event.client_payload.latex_image != ''
        run: |
          TIMESTAMP=$(date +%Y%m%d%H%M%S)
          mv architecture_diagram.png architecture/diagram_$TIMESTAMP.png
          echo "FILE=architecture/diagram_$TIMESTAMP.png" >> $GITHUB_ENV

      - name: Timestamp and Move Fallback PDF
        if: github.event.client_payload.latex_pdf != ''
        run: |
          TIMESTAMP=$(date +%Y%m%d%H%M%S)
          mv architecture_fallback.pdf architecture/diagram_fallback_$TIMESTAMP.pdf
          echo "FILE=architecture/diagram_fallback_$TIMESTAMP.pdf" >> $GITHUB_ENV

      - name: Update README with Diagram Image
        if: github.event.client_payload.latex_image != ''
        run: |
          FILE_NAME=$(echo $FILE)
          echo "" >> README.md
          echo "* Diagram received at $(date): $FILE_NAME" >> README.md

      - name: Update README with Fallback PDF Link
        if: github.event.client_payload.latex_pdf != ''
        run: |
          FILE_NAME=$(echo $FILE)
          # $FILE already starts with architecture/, so link to it directly
          echo "[LaTeX Compilation Failed - View Raw LaTeX Code]($FILE_NAME)" >> README.md
          echo "* Fallback PDF received at $(date): $FILE_NAME" >> README.md

      - name: Commit and Push Changes
        run: |
          git config user.name "github-actions[bot]"
          git config user.email "github-actions[bot]@users.noreply.github.com"
          git add architecture/*
          git add README.md
          git commit -m "Add architecture diagram received at $(date)"
          git push
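For comparison, the receiving steps (decode, timestamp, move, and append to the README) fit in one short Python script, which avoids repeating the same `if:` condition across six steps and reads the payload from a file rather than inlining it into the shell script. A minimal sketch, assuming the `latex_image`/`latex_pdf` keys and the `architecture/` naming scheme above, and relying on the `GITHUB_EVENT_PATH` variable that GitHub Actions sets to the path of the event's JSON payload. `store_diagram`, `main`, and the README line format are illustrative:

```python
import base64
import json
import os
from datetime import datetime, timezone
from pathlib import Path


def store_diagram(event: dict, repo_root: Path = Path(".")) -> Path:
    """Decode the dispatched PNG or fallback PDF, save it, and log it in README.md."""
    payload = event.get("client_payload", {})
    stamp = datetime.now(timezone.utc).strftime("%Y%m%d%H%M%S")
    if payload.get("latex_image"):
        data, name, label = payload["latex_image"], f"diagram_{stamp}.png", "Diagram"
    elif payload.get("latex_pdf"):
        data, name, label = payload["latex_pdf"], f"diagram_fallback_{stamp}.pdf", "Fallback PDF"
    else:
        raise ValueError("client_payload has neither latex_image nor latex_pdf")

    arch_dir = repo_root / "architecture"
    arch_dir.mkdir(parents=True, exist_ok=True)
    target = arch_dir / name
    # validate=True rejects characters outside the Base64 alphabet instead of skipping them.
    target.write_bytes(base64.b64decode(data, validate=True))

    link = f"architecture/{name}"  # relative to the top-level README
    with open(repo_root / "README.md", "a", encoding="utf-8") as readme:
        readme.write(f"\n* {label} received at {stamp} UTC: [{link}]({link})\n")
    return target


def main() -> None:
    """Entry point for a workflow step; reads the event payload GitHub wrote to disk."""
    with open(os.environ["GITHUB_EVENT_PATH"], encoding="utf-8") as fh:
        print(store_diagram(json.load(fh)))
```

A single workflow step would call `main()` after checkout, leaving the existing commit-and-push step unchanged.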