AI Context: When More Isn't Always Better

Why automation doesn't mean you can stop curating

I spend a lot of time thinking about context with LLMs—it’s always been that way. For a clear primer, see Addy Osmani’s Context Engineering: Bringing Engineering Discipline to Prompts. Agentic models haven’t changed the role of context. They automate plenty and even streamline management—Claude Code constantly nudges me to /compact and offers helpers like /clear and /context.

You can even let it do this automatically—but there’s a risk: if you stop curating, the model’s context can drift. Sometimes that’s fine—especially when it’s grinding on problems that don’t need much context—but it’s still something you need to watch.

But streamlining isn’t the same as absolution; I still have to manage the context myself. I like to compare managing context to garbage collection in programming languages. There are differences. For starters, LLM context is far less deterministic: in the moment, you often can’t compute what should stay and what should go, because it’s hard to predict what will be useful going forward.
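
To make the garbage-collection analogy concrete, here is a minimal Python sketch. It is an illustration under stated assumptions, not how Claude Code's /compact works: the `Message` class, the `pinned` flag, and the word-count stand-in for a tokenizer are all hypothetical. Pinned messages play the role of GC roots, and the sweep drops the oldest unpinned messages until the history fits a budget.

```python
from dataclasses import dataclass


@dataclass
class Message:
    text: str
    pinned: bool = False  # hypothetical flag: a "root" the engineer wants kept


def rough_tokens(text: str) -> int:
    # Crude stand-in for a real tokenizer: count whitespace-separated words.
    return len(text.split())


def prune(history: list[Message], budget: int) -> list[Message]:
    """Drop the oldest unpinned messages until the history fits the budget."""
    total = sum(rough_tokens(m.text) for m in history)
    kept = []
    for m in history:  # history is ordered oldest first
        if total > budget and not m.pinned:
            total -= rough_tokens(m.text)  # sweep: discard this message
        else:
            kept.append(m)
    return kept


history = [
    Message("Project goal: build a Prolog engine", pinned=True),
    Message("long debugging tangent about a parser bug that went nowhere"),
    Message("latest failing test is in the unifier"),
]
pruned = prune(history, budget=15)  # keeps the pinned goal and the latest note
```

The sketch also shows where the analogy breaks down: a real collector can prove an object is unreachable, while here "oldest first" is only a guess at what has stopped being useful, and deciding what to pin is still a human judgment. If the pinned messages alone exceed the budget, this version simply returns them over budget.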

I follow a number of people who, like me, are building software with Claude Code or other LLMs, and “context rot” comes up constantly. In common usage, the term seems to describe the point where much of the context has become noise, more a distraction to the LLM than a help. Consider this finding from recent research:

“We demonstrate that… model performance degrades as input length increases, often in surprising and non-uniform ways. Real-world applications typically involve much greater complexity, implying that the influence of input length may be even more pronounced in practice.”

Another way context can rot: the model latches onto a wrongheaded idea and it warps choices downstream. When that happens, the best move is often to clear the context and rebuild the scaffolding. The catch is it isn’t computable—you have to notice it. It takes you, the engineer, to spot when the model has spun up a little conspiracy theory and decide when to hit reset.

Occasionally, Claude Code will realize on its own that it’s chasing its tail, going down the proverbial rabbit hole, and bail on a particular line of debugging. That’s also your cue to consider pruning the context it just generated.

I thought the game-play explanation of context provided by Anthropic was a great introduction to context and its role in the agentic framework:

For a deeper dive, here are a few useful links on context management from Anthropic:

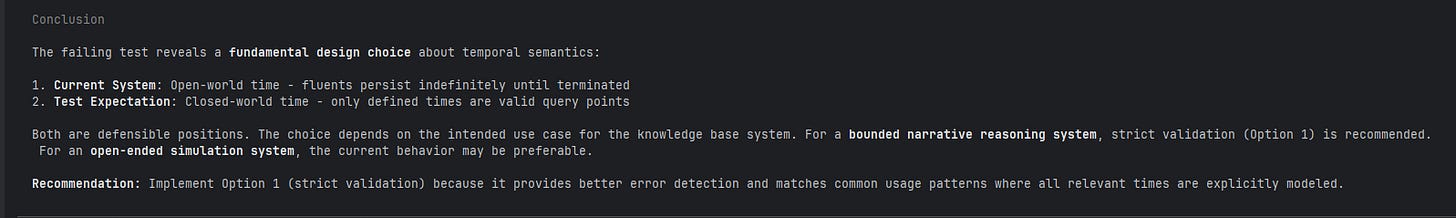

My Claude Code Prolog logic-engine experiment is going great. In true test-driven, fly-by-wire fashion, the current 1,800-test suite is my cockpit view—and it’s giving me real confidence. Hopefully, the worst hump is behind me (until the next big thing!). For now, I get to enjoy the esoterica—temporal semantics and other fun corners of the system (see figure).

P.S. I went with Option 1.