AI on EC2 Over Easy (Part I)

Fine-tuning LLMs on Amazon Web Services EC2

Recently, I started using Amazon Web Services (AWS) SageMaker to support my Large Language Model (LLM) experimentation. A few weeks ago, despite being cautious, I incurred over $50 in charges from a mistake: I inadvertently launched a second instance while debugging a code/API edge case. After that experience, I moved my code-centric LLM explorations onto AWS EC2 virtual machines. Working at the command line gives me more control when experimenting with low-level code APIs. Once the experiments mature, I plan to move them to SageMaker to use its high-level Machine Learning (ML) APIs and presentation features.

This post collects a few thoughts on the setup and performance of Python scripts on an EC2 instance. The scripts were originally discussed here:

A Fast Flight of Small Language Models on a Vanilla Laptop (substack.com)

I have an AWS account, which I discussed at the link below:

Setting up an EC2 instance was straightforward using Amazon’s instructions. However, given the many options and configurations available, it took me a while to read through them and digest my choices. The good news is that creating and destroying instances (if they don’t turn out to be just right or are no longer needed) is easy. You can also create more than one instance. You incur expenses when you run the instances, not when you create them; thus, keeping several instances to choose from as needed is reasonable. My strategy is to prototype and develop on inexpensive instances and “rent” the expensive, powerful instances for “real” training runs once the scripts and algorithms have been debugged.

For this experiment, I used a fairly low-end platform (g4dn.xlarge) with 1 GPU (16 GiB, see Appendix) and 4 vCPUs, which I rented for about $0.50 an hour. I chose the “Deep Learning OSS Nvidia Driver AMI GPU PyTorch 2.0.1” configuration; the OS is Linux. My client is a consumer Windows 11 laptop. I interacted with the instance (which runs in the cloud in Virginia) in two ways:

Using ssh/scp from a Windows Subsystem for Linux, version 2 (WSL2) console.

Using the EC2 Instance Connect web interface (Figure 1 illustrates the login banner).

This instance came with CUDA-enabled PyTorch pre-installed. If you train an LLM on the GPU with PyTorch, your model and data (“slug”) must fit into that GPU's memory. A CUDA-enabled build of PyTorch can still run on the CPU, but the script has to select it explicitly; by default, the Hugging Face trainer used here places the model on the GPU when one is available. PyTorch documentation:

PyTorch documentation — PyTorch 2.2 documentation

Thus, I limited myself to the 350 million parameter OPT model discussed in my earlier write-up, as its slug could fit on the GPU. I would also have liked to test the Phi-2 model; however, it would not fit on this instance’s GPU. I plan to use a more powerful instance for the Phi-2 experiments. For more information on these open-source LLMs:

Phi-2 model - available from Hugging Face - 2.7 billion parameters.

Meta AI’s OPT-350m - available from Hugging Face - 350 million parameters. Reference OPT: Open Pre-trained Transformer Language Models.
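A back-of-the-envelope estimate is consistent with OPT-350m fitting on a 16 GiB GPU while Phi-2 does not. The sketch below assumes full fine-tuning in 32-bit precision with an Adam-style optimizer, which needs roughly 16 bytes per parameter (4 for the weight, 4 for the gradient, 8 for the two optimizer states). That rule of thumb is an assumption, not a measurement from this experiment, and it ignores activations, so real usage will be higher.

```python
GIB = 1024 ** 3  # bytes in one gibibyte


def full_finetune_gib(n_params, bytes_per_param=16):
    """Rough memory for weights, gradients and Adam states (activations excluded)."""
    return n_params * bytes_per_param / GIB


GPU_GIB = 16  # GPU memory on the g4dn.xlarge instance
# Parameter counts as quoted in the model list above
models = {"OPT-350m": 350e6, "Phi-2": 2.7e9}

for name, n_params in models.items():
    need = full_finetune_gib(n_params)
    verdict = "fits" if need < GPU_GIB else "does not fit"
    print(f"{name}: ~{need:.1f} GiB needed, {verdict} in {GPU_GIB} GiB")
```

Under these assumptions, OPT-350m needs around 5 GiB and Phi-2 around 40 GiB, before counting activations.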

This experiment was straightforward. It was an exercise to get up to speed with running LLM training scripts on EC2. In this case, I developed the scripts on my laptop and copied them to EC2 for execution. I also ran them on my laptop for comparison.

The test script used was based on the following code:

import time

import torch
from datasets import load_dataset
from peft import LoraConfig
from transformers import AutoModelForCausalLM, AutoTokenizer
from trl import DataCollatorForCompletionOnlyLM, SFTTrainer


def opt_setup():
    # Measure start time
    start_time = time.time()

    print(f"Is cuda available? {torch.cuda.is_available()}")

    # Load the JSONL dataset
    data_files = "train.jsonl"
    dataset = load_dataset("json", data_files=data_files, split="train")

    # Select base_model by the model to be used
    base_model = "facebook/opt-350m"
    model = AutoModelForCausalLM.from_pretrained(base_model, trust_remote_code=True)
    tokenizer = AutoTokenizer.from_pretrained(base_model, trust_remote_code=True)

    # Add a padding token only if the tokenizer lacks one, and grow the
    # embedding table if the new token id would fall outside it
    if tokenizer.pad_token is None:
        tokenizer.add_special_tokens({"pad_token": "[PAD]"})
        if len(tokenizer) > model.get_input_embeddings().num_embeddings:
            model.resize_token_embeddings(len(tokenizer))
    print("Base Model: {}".format(base_model))

    def formatting_prompts_func(example):
        # Turn a batch of records into prompt/response training strings
        output_texts = []
        for i in range(len(example["instruction"])):
            text = f"### Question: {example['instruction'][i]}\n ### Response: {example['response'][i]}"
            output_texts.append(text)
        return output_texts

    # Compute the loss only on the text that follows the response marker
    response_template = " ### Response:"
    collator = DataCollatorForCompletionOnlyLM(response_template, tokenizer=tokenizer)

    # PEFT related: LoRA adapter configuration
    peft_config = LoraConfig(
        r=16,
        lora_alpha=32,
        lora_dropout=0.05,
        bias="none",
        task_type="CAUSAL_LM",
    )

    # Note: newer TRL releases move some of these arguments into a config object
    trainer = SFTTrainer(
        model,
        train_dataset=dataset,
        formatting_func=formatting_prompts_func,
        data_collator=collator,
        max_seq_length=2048,
        packing=False,
        peft_config=peft_config,  # PEFT related
    )
    trainer.train()

    # Save the fine-tuned model locally
    trainer.save_model("./saved")

    # Measure end time and report the duration
    end_time = time.time()
    duration = end_time - start_time
    print(f"The function opt_setup took {duration} seconds to complete.")


if __name__ == "__main__":
    opt_setup()

The above code uses training data to fine-tune an OPT-350m LLM using Parameter-Efficient Fine-Tuning (PEFT). PEFT limits fine-tuning changes to a small set of adapters used with the model. PEFT has benefits, including memory and compute efficiency, and there might be model performance/stability advantages. A good introduction to PEFT can be found here:

Parameter-Efficient Fine-Tuning using 🤗 PEFT (huggingface.co)
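A rough count shows how small the adapter set is. LoRA, the PEFT method configured in the code above, adds two low-rank matrices to each adapted weight matrix, so a d_in x d_out layer gains r * (d_in + d_out) trainable parameters. The sketch below plugs in r=16 from the `LoraConfig`; the OPT-350m dimensions (hidden size 1024, 24 decoder layers) and the choice of adapting only the query and value projections in each layer are assumptions about the model and the PEFT defaults, so treat the result as an estimate rather than a measured figure.

```python
def lora_param_count(d_in, d_out, r):
    """Trainable parameters LoRA adds to one d_in x d_out weight matrix."""
    return r * (d_in + d_out)  # A is r x d_in, B is d_out x r


R = 16                 # LoRA rank, from the LoraConfig above
HIDDEN = 1024          # assumed OPT-350m hidden size
LAYERS = 24            # assumed number of decoder layers
ADAPTED_PER_LAYER = 2  # assumed: query and value projections only
TOTAL_PARAMS = 350e6   # nominal model size

trainable = LAYERS * ADAPTED_PER_LAYER * lora_param_count(HIDDEN, HIDDEN, R)
print(f"LoRA trainable parameters: {trainable:,}")
print(f"Share of the full model: {trainable / TOTAL_PARAMS:.2%}")
```

With these assumed dimensions, the adapters amount to well under 1% of the model's parameters, which is where the memory and compute savings come from.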

The training data consisted of 1,200+ lines of JSON holding over 200 instruction-response entries, each with up to several paragraphs of text. The total file size was about 140 KB of cleartext.
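For reference, each record needs an `instruction` field and a `response` field, the names read by `formatting_prompts_func`. The sketch below assumes the JSON Lines layout (one object per line) that the file name `train.jsonl` suggests; it writes two made-up records to a temporary file and reads them back with a basic field check. The sample text and the helper name `read_jsonl` are hypothetical and only illustrate the layout.

```python
import json
import os
import tempfile

# Hypothetical sample records; the real file held over 200 entries
records = [
    {"instruction": "What is EC2?", "response": "A service for renting virtual machines."},
    {"instruction": "What is PEFT?", "response": "Fine-tuning a small set of adapter weights."},
]


def read_jsonl(path):
    """Read a JSON Lines file and check that each record has the expected fields."""
    rows = []
    with open(path, encoding="utf-8") as f:
        for line_no, line in enumerate(f, start=1):
            if not line.strip():
                continue  # skip blank lines
            row = json.loads(line)
            missing = {"instruction", "response"} - row.keys()
            if missing:
                raise ValueError(f"line {line_no} is missing {sorted(missing)}")
            rows.append(row)
    return rows


# Write the samples to a temporary train.jsonl, one JSON object per line
path = os.path.join(tempfile.mkdtemp(), "train.jsonl")
with open(path, "w", encoding="utf-8") as f:
    for rec in records:
        f.write(json.dumps(rec) + "\n")

loaded = read_jsonl(path)
print(f"{len(loaded)} records loaded from {path}")
```

Running a check like this before copying the file to the instance can catch a malformed record before a paid training run starts.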

Discussion

Using Parameter-Efficient Fine-Tuning (PEFT) to fine-tune OPT-350m is a departure from my previous write-up (see earlier link).

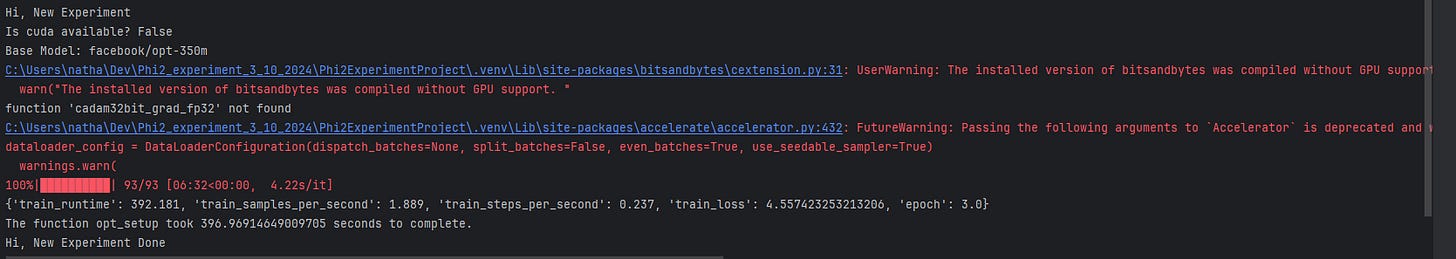

Figures 2-5 illustrate the following outcomes with this model using the parameter set provided by the code sample:

Fine-tuning, with or without PEFT, was nearly 20 times faster on the EC2 instance than on the laptop. Most of that speed-up came from the GPU.

Fine-tuning with PEFT was nearly twice as fast as fine-tuning the entire model.

Using EC2 for this experiment was easy and useful. The ability to size and create instances for the training task is very attractive—you only pay for more expensive, powerful virtual platforms when needed.