The Death of Prompt Engineering (And Was Carl the Murderer?)

How, in the coding domain, AI collaboration seems to have evolved from prompt engineering to architectural decisions

A Small but Concrete Milestone

Several nights ago, I hit a small but important milestone in an experiment I’ve been carrying around for weeks: a Python-based logic programming engine (a Prolog in miniature) built for interactive fiction. It’s a first-step milestone, but it mattered: after four weeks of building stuff, the engine actually solved something — in this case, a classic detective puzzle where the system identified the murderer through witness contradictions. That felt refreshingly concrete. (Details at the end of the post.)

Prompt Anxiety, in a Good Way

But right as that landed, I got a note asking me what I thought about prompts. It made me weirdly angsty — in a good way — and forced me to reflect on how my relationship with AIs has changed, especially over the last four weeks. For almost a year, I lived in the cut-and-paste world of prompt engineering and endless tuning while working on my Bad Science Fiction experiments. That was necessary then: finesse and hand-crafted prompts were the tools of the trade. There’s plenty of prompt talk in those posts, but looking back, it feels increasingly deprecated.

What Still Holds True About Prompting

That said, some prompting truths still hold. Be clear and consistent with terminology. When you need an LLM to interpret a specialized algorithm or domain precisely, be explicit about every assumption. And when a task truly needs a carefully detailed one-shot prompt, I often iterate with an LLM to draft the prompt before handing it to another (or the same) model — an LLM is great at calling out additional specification and detail you might have missed. Lately, I’ve been iterating with GPT-5 to help craft the (rare) complex prompt for Claude Code — it’s a useful two-stage workflow.

From Prompts to Context

Yet I feel like the rules are shifting. Toward the end of my Bad Science Fiction period, collaborating with AI stopped being primarily about engineered phrasing and became more about context — the skill was the professional-grade presentation of information, so that the model could effectively exploit it.

Now, working with agentic coding systems, I feel even further unmoored from those old habits. I spend less time obsessing over wording and reading code, and more time directing the agent and getting smart about big-picture technical and algorithmic choices. For me, the craft has moved from “how to ask” to “what to build together.”1

Why the Shift?

Largely because Claude Code works directly against my repository. It already has the full context of the codebase, so I don’t need to write anything up for it.2 And since tasks are best handled in smaller pieces, I rarely ask the agent to take big leaps that require a lot of detailed explaining — it’s almost always better to break large tasks into smaller ones and iterate together, adapting one step at a time.

My role has shifted from prompt-crafting to something more attentional and directional: I tell Claude what to focus on and in which direction to head, and then I ask for—and evaluate—an analysis before it commits to action. Through that back-and-forth, tuning happens naturally in the interaction itself, not in the wording upfront.

That’s why, with today’s agentic AI, I feel less tethered to “getting the prompt right” and more invested in steering the collaboration. The work is no longer about writing perfect instructions; it’s about asking the right questions, keeping sight of the bigger technical picture, and deciding what’s worth building in what order.

Coding by Wire

The last couple of weeks have felt pretty grindy — more like AI babysitting at times. We wrestled with deep-seated bugs and accepted a few architectural compromises that will need to be revisited. But I wanted to hit a milestone before diving back into those fundamentals. Sometimes it’s better to stay the course, notch a win, and circle back with a clearer purpose.

More and more, the work feels like coding-by-wire: the AIs move the control surfaces while I watch the instruments, keeping an eye on the horizon to make sure we’re still on course.

And that’s where I’ve landed. These days, my interactions with the AI are less about prompts and more about architectural poker — knowing when to hold the line on a compromise, when to fold a bad idea, when to walk away from a dead end, and when to run to a milestone.

Choosing the Ground

In that light, I sometimes caught myself second-guessing. Should I drill down into the details? Spend more time on prompt crafting—micromanaging decisions—and proofreading?

Should I be saying more to Claude?

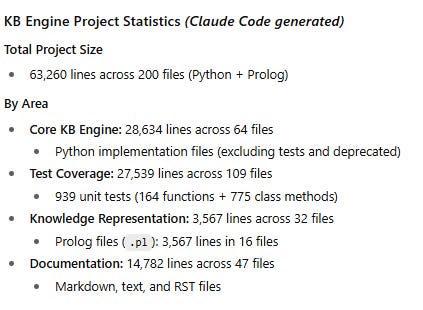

I didn’t. And we still got it done. In hindsight, I think my hunch was right: my job was to choose the ground — to keep hold of the big picture, to line up the development so the features and bugs would topple like dominoes, and not get lost in the weeds. Because honestly, there were a lot of weeds, and I wouldn’t have been much use down in them. Consider:

If I sound circumspect, I’m not. I’ve always wanted to build a logic engine and use it to explore interactive fiction projects and games. There’s still a way to go before this project is capped, but without a doubt, what Claude, GPT-5, and I pulled off in four weeks of part-time effort would have been impossible to achieve in ten times that span just a few years ago. If I sound a little winded, it’s only because I’m impressed...

Miles (yet) to go, and I’ll report in on how it goes. Hopefully just as well.

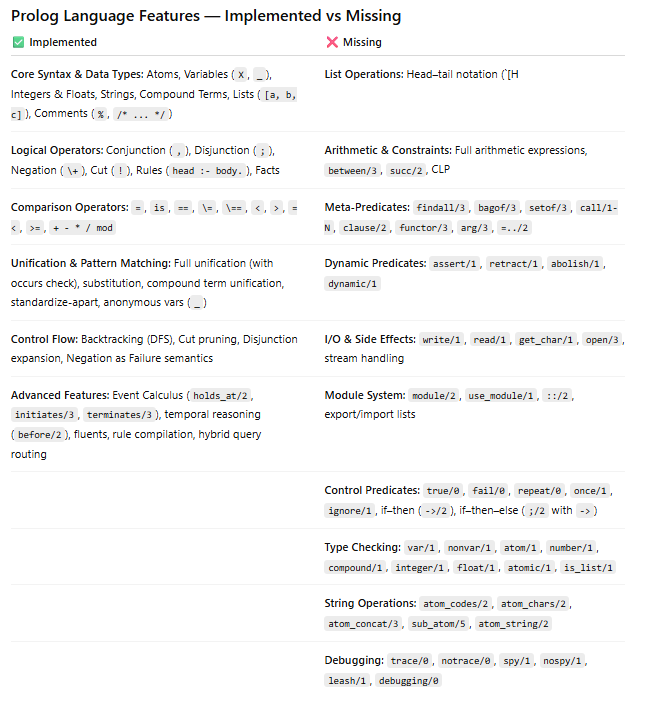

I should clarify one detail. I’ve been using Prolog and logic programming interchangeably. What I have now isn’t a full Prolog implementation; that’s aspirational. Right now, it’s a logic programming language that implements a subset of Prolog’s features. Claude did a good job of drawing out the distinction between the two (Implemented vs. Missing); see the figure below.
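For readers new to logic programming, the heart of even a small Prolog subset is two mechanisms: unification (matching terms and binding variables) and depth-first, left-to-right resolution (proving goals by trying facts and rules in order). Below is a minimal Python sketch of both, using the puzzle’s murderer rule as the query. It is not the KBEngine API used in the tests later in this post; the term representation (tuples for compound terms, capitalized strings for variables) and the names unify, solve, and query are hypothetical choices for illustration, and there is no parser, cut, negation, or occurs check.

```python
from itertools import count

# Hypothetical toy representation (not the KBEngine API):
#   variables -> strings starting with an uppercase letter, e.g. "X"
#   atoms     -> other strings, e.g. "carl"
#   compounds -> tuples (functor, arg1, arg2, ...), e.g. ("murderer", "X")

_fresh = count()  # counter used to rename clause variables apart


def is_var(term):
    return isinstance(term, str) and term[:1].isupper()


def walk(term, subst):
    """Follow bindings until reaching a non-variable or an unbound variable."""
    while is_var(term) and term in subst:
        term = subst[term]
    return term


def unify(a, b, subst):
    """Return a substitution that makes a and b equal, or None (no occurs check)."""
    a, b = walk(a, subst), walk(b, subst)
    if a == b:
        return subst
    if is_var(a):
        return {**subst, a: b}
    if is_var(b):
        return {**subst, b: a}
    if isinstance(a, tuple) and isinstance(b, tuple) and len(a) == len(b):
        for x, y in zip(a, b):
            subst = unify(x, y, subst)
            if subst is None:
                return None
        return subst
    return None


def rename(term, mapping):
    """Give a clause fresh variable names each time it is used."""
    if is_var(term):
        if term not in mapping:
            mapping[term] = f"{term}_{next(_fresh)}"
        return mapping[term]
    if isinstance(term, tuple):
        return tuple(rename(t, mapping) for t in term)
    return term


def solve(goals, kb, subst):
    """Prove goals left to right, trying clauses in order (Prolog's search strategy)."""
    if not goals:
        yield subst
        return
    first, rest = goals[0], goals[1:]
    for head, body in kb:
        mapping = {}
        new_head = rename(head, mapping)
        new_body = [rename(g, mapping) for g in body]
        s = unify(first, new_head, subst)
        if s is not None:
            yield from solve(new_body + rest, kb, s)


def resolve(term, subst):
    """Apply bindings all the way down so answers hold no intermediate variables."""
    term = walk(term, subst)
    if isinstance(term, tuple):
        return tuple(resolve(t, subst) for t in term)
    return term


def variables(term):
    if is_var(term):
        return {term}
    if isinstance(term, tuple):
        return set().union(*(variables(t) for t in term))
    return set()


def query(goal, kb):
    """Return one dict of bindings per solution; an empty list means the goal failed."""
    names = sorted(variables(goal))
    return [{v: resolve(v, s) for v in names} for s in solve([goal], kb, {})]


# A few of the puzzle's clauses in toy form; facts are (head, []) pairs.
KB = [
    (("suspect", "art"), []),
    (("suspect", "burt"), []),
    (("suspect", "carl"), []),
    (("contradicts_majority", "carl"), []),
    # murderer(X) :- contradicts_majority(X).
    (("murderer", "X"), [("contradicts_majority", "X")]),
]

print(query(("murderer", "X"), KB))    # [{'X': 'carl'}]
print(query(("suspect", "X"), KB))     # [{'X': 'art'}, {'X': 'burt'}, {'X': 'carl'}]
print(query(("murderer", "art"), KB))  # []
```

Standard Prolog layers cut, negation as failure, arithmetic, and list syntax such as [H|T] on top of this core; which of those a given engine supports is exactly what an Implemented vs. Missing breakdown tracks.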

In the weeks ahead, I’ll be delving deeper into lessons and posting updates here — so stay tuned. Once I’m past the foundational logic programming work, I’ll move on to interactive fiction (and I can’t wait).

As for the milestone itself: I took Annie Ogborn’s Detective Puzzle solution and translated it into a form compatible with my engine. Since head–tail list notation [H|T] isn’t implemented yet, I substituted predicate-based representations instead:

% Murder Mystery Logic Puzzle - Simplified for KB Engine
% Adapted from: https://github.com/Anniepoo/prolog-examples/blob/master/newdetective.pl
% One of three suspects is a murderer who lies; the others tell the truth

% Witness testimony as simple facts
testimony_town(art, burt, out).
testimony_rel(art, burt, friend).
testimony_rel(art, carl, enemy).
testimony_town(burt, burt, out).
testimony_rel(burt, burt, stranger).
testimony_town(carl, art, in).
testimony_town(carl, burt, in).
testimony_town(carl, carl, in).

% Domain values
town_val(in).
town_val(out).
rel_val(friend).
rel_val(enemy).
rel_val(stranger).

% Suspects
suspect(art).
suspect(burt).
suspect(carl).

% Check for contradictions between witnesses
% Art and Burt agree that Burt was out of town
agrees_burt_out(art).
agrees_burt_out(burt).

% Carl disagrees - says Burt was in town
disagrees_burt_out(carl).

% A witness is lying if they contradict the majority
% Carl contradicts both Art and Burt about Burt's location
contradicts_majority(carl).

% The murderer is the one who lies
murderer(X) :- contradicts_majority(X).

% Truth values based on the majority
truth_town(burt, out).
truth_town(art, in).
truth_town(carl, in).
truth_rel(burt, friend).
truth_rel(carl, enemy).
truth_rel(burt, stranger).

% Check consistency for verification
consistent_with_truth(art) :-
    testimony_town(art, burt, out),
    truth_town(burt, out).
consistent_with_truth(burt) :-
    testimony_town(burt, burt, out),
    truth_town(burt, out).

Then, to verify that the engine could solve the puzzle, Claude and I wrote a unit test around it (shown below). The key line is the assertion in test_murderer_identification that checks result[0]['X'] == 'carl': that is where the test confirms the murderer.

"""Test murder mystery logic puzzle implementation.

This test validates the KB engine's ability to handle logic puzzles
involving witness testimonies and contradiction detection.
"""

import pytest
import sys
from pathlib import Path

# Path setup for runtime import
_kb_root = Path(__file__).parent.parent.parent
if str(_kb_root) not in sys.path:
    sys.path.insert(0, str(_kb_root))

# Import with IDE support via local stub file
from kb_engine import KBEngine


class TestMurderMystery:
    """Test suite for murder mystery logic puzzle."""

    @pytest.fixture(scope="class")
    def mystery_kb(self):
        """Create a KB engine with murder mystery rules loaded."""
        engine = KBEngine()
        mystery_file = Path(__file__).parent / "murder_mystery" / "murder_mystery_simple.pl"
        if mystery_file.exists():
            engine.consult(str(mystery_file))
        return engine

    def test_testimony_facts_loaded(self, mystery_kb):
        """Test that witness testimonies are correctly loaded."""
        # Art's testimonies
        result = mystery_kb.query("testimony_town(art, burt, out)")
        assert len(result) > 0, "Art's testimony about Burt being out should be loaded"

        # Carl's testimonies
        result = mystery_kb.query("testimony_town(carl, burt, in)")
        assert len(result) > 0, "Carl's testimony about Burt being in should be loaded"

    def test_suspects_defined(self, mystery_kb):
        """Test that all suspects are properly defined."""
        suspects = ["art", "burt", "carl"]
        for suspect in suspects:
            result = mystery_kb.query(f"suspect({suspect})")
            assert len(result) > 0, f"{suspect.title()} should be defined as a suspect"

    def test_contradiction_detection(self, mystery_kb):
        """Test that the system correctly identifies who contradicts the majority."""
        result = mystery_kb.query("contradicts_majority(X)")
        assert len(result) == 1, "Exactly one person should contradict the majority"
        assert result[0]['X'] == 'carl', "Carl should be identified as contradicting the majority"

    def test_murderer_identification(self, mystery_kb):
        """Test that the murderer is correctly identified."""
        result = mystery_kb.query("murderer(X)")
        assert len(result) == 1, "Exactly one murderer should be identified"
        assert result[0]['X'] == 'carl', "Carl should be identified as the murderer"

    def test_consistency_with_truth(self, mystery_kb):
        """Test that truth-tellers are consistent with established truth."""
        # Art should be consistent (truth-teller)
        result = mystery_kb.query("consistent_with_truth(art)")
        assert len(result) > 0, "Art should be consistent with the truth"

        # Burt should be consistent (truth-teller)
        result = mystery_kb.query("consistent_with_truth(burt)")
        assert len(result) > 0, "Burt should be consistent with the truth"

    def test_logic_chain(self, mystery_kb):
        """Test the complete logical chain of the puzzle."""
        # Step 1: Verify testimonies exist
        art_testimony = mystery_kb.query("testimony_town(art, burt, out)")
        burt_testimony = mystery_kb.query("testimony_town(burt, burt, out)")
        carl_testimony = mystery_kb.query("testimony_town(carl, burt, in)")
        assert all([art_testimony, burt_testimony, carl_testimony]), \
            "All key testimonies should exist"

        # Step 2: Verify agreement pattern
        agrees_result = mystery_kb.query("agrees_burt_out(X)")
        assert len(agrees_result) >= 2, "At least two people should agree about Burt"

        # Step 3: Verify disagreement
        disagrees_result = mystery_kb.query("disagrees_burt_out(carl)")
        assert len(disagrees_result) > 0, "Carl should disagree about Burt's location"

        # Step 4: Verify conclusion
        murderer = mystery_kb.query("murderer(X)")
        assert murderer[0]['X'] == 'carl', "Logical chain should lead to Carl as murderer"

    @pytest.mark.parametrize("witness,location,expected", [
        ("art", "burt", "out"),
        ("burt", "burt", "out"),
        ("carl", "burt", "in"),
        ("carl", "art", "in"),
        ("carl", "carl", "in"),
    ])
    def test_individual_testimonies(self, mystery_kb, witness, location, expected):
        """Test individual witness testimonies parametrically."""
        result = mystery_kb.query(f"testimony_town({witness}, {location}, {expected})")
        assert len(result) > 0, f"{witness}'s testimony about {location} being {expected} should exist"


class TestMurderMysteryEdgeCases:
    """Test edge cases and error conditions for murder mystery puzzle."""

    # kb_overlay is not defined in this file; it is presumably a shared
    # fixture (e.g., in conftest.py) that builds a KB from a Prolog string.

    def test_missing_testimony_handling(self, kb_overlay):
        """Test behavior when testimonies are incomplete."""
        kb = kb_overlay("""
            % Incomplete testimonies
            testimony_town(art, burt, out).
            % Missing other testimonies
            suspect(art).
            suspect(burt).
            % Simple contradiction logic
            contradicts_majority(X) :-
                suspect(X),
                \\+ testimony_town(X, _, _).
        """)

        # Should handle missing testimonies gracefully
        result = kb.query("contradicts_majority(X)")
        assert result is not None, "Should handle missing testimonies without error"

    def test_unanimous_testimony(self, kb_overlay):
        """Test case where all witnesses agree (no liar)."""
        kb = kb_overlay("""
            testimony_town(art, location, in).
            testimony_town(burt, location, in).
            testimony_town(carl, location, in).
            suspect(art).
            suspect(burt).
            suspect(carl).
            agrees_all(X) :- suspect(X), testimony_town(X, location, in).
            contradicts_majority(X) :- fail.
        """)

        # No one should contradict when all agree
        result = kb.query("contradicts_majority(X)")
        assert len(result) == 0, "No contradictions when all testimonies agree"


if __name__ == "__main__":
    # Run pytest on this file when the script is executed directly
    exit_code = pytest.main([__file__, "-v"])
    sys.exit(exit_code)

When the unit test was run, it produced the following output, verifying that the tests were successful (and Carl, the murderer, was revealed).

============================= test session starts =============================
platform win32 -- Python 3.12.4, pytest-8.4.1, pluggy-1.6.0 -- C:\Users\natha\Dev\GITHUB_DESKTOP\BSF2-IF-Experimental\src\.venv\Scripts\python.exe
cachedir: .pytest_cache
hypothesis profile 'default'
rootdir: C:\Users\natha\Dev\GITHUB_DESKTOP\BSF2-IF-Experimental\src\kb
configfile: pytest.ini
plugins: hypothesis-6.138.8, cov-6.2.1, timeout-2.4.0, xdist-3.8.0
timeout: 300.0s
timeout method: thread
timeout func_only: False
collecting ... collected 13 items

test_murder_mystery.py::TestMurderMystery::test_testimony_facts_loaded PASSED [ 7%]
test_murder_mystery.py::TestMurderMystery::test_suspects_defined PASSED [ 15%]
test_murder_mystery.py::TestMurderMystery::test_contradiction_detection PASSED [ 23%]
test_murder_mystery.py::TestMurderMystery::test_murderer_identification PASSED [ 30%]
test_murder_mystery.py::TestMurderMystery::test_consistency_with_truth PASSED [ 38%]
test_murder_mystery.py::TestMurderMystery::test_logic_chain PASSED [ 46%]
test_murder_mystery.py::TestMurderMystery::test_individual_testimonies[art-burt-out] PASSED [ 53%]
test_murder_mystery.py::TestMurderMystery::test_individual_testimonies[burt-burt-out] PASSED [ 61%]
test_murder_mystery.py::TestMurderMystery::test_individual_testimonies[carl-burt-in] PASSED [ 69%]
test_murder_mystery.py::TestMurderMystery::test_individual_testimonies[carl-art-in] PASSED [ 76%]
test_murder_mystery.py::TestMurderMystery::test_individual_testimonies[carl-carl-in] PASSED [ 84%]
test_murder_mystery.py::TestMurderMysteryEdgeCases::test_missing_testimony_handling PASSED [ 92%]
test_murder_mystery.py::TestMurderMysteryEdgeCases::test_unanimous_testimony PASSED [100%]
============================= 13 passed in 0.93s ==============================

I’ll close with a few Substack posts that trace the journey to this point, shared below in reverse chronological order. If you find them interesting and want more depth, you can also browse my Substack Notes from the same period.

Code, Tests, and Flashlights: Latest Notes from a Prolog Engine Experiment. September 7.

Oh Come On! Notes From An Experimental Interactive Fiction and AI Software Project. August 29.

Fly-By-Wire Coding with AI. August 22.

That said, I make considerable use of the Claude.md file — a kind of working contract and collaboration guide between me and Claude Code. You can think of it as a “system prompt” of sorts, but with an important difference: it isn’t tuned for specific interactions. Instead, it functions as a broader guide, shaping the overall way we work together.

One way to think about it is this: the meta-knowledge and structure that used to live in the prompt now lives in the project itself — in the codebase, its modules, its documentation, and the code. A well-refactored, modularized project becomes the real “prompt,” making it easier for Claude Code to navigate and reason about the system.

In other words, clean code has become the new prompt engineering. The better organized the project, the less you need to spell things out — the structure and documentation do the heavy lifting.